1.2.1. Methane satellite observations completeness assessment for greenhouse gas monitoring#

Production date: 03-09-2025

Produced by: Davide Putero (CNR), Paolo Cristofanelli (CNR)

🌍 Use case: Using satellite observations for quantifying global trends and uncertainties in greenhouse gas concentrations#

❓ Quality assessment questions#

How can the variability of spatial and temporal data coverage affect the quantification of long-term atmospheric methane trends from satellite measurements (XCH\(_4\) Level 3 gridded product)?

What are the uncertainties related to the XCH\(_4\) satellite observations?

Methane (CH\(_4\)) is the second most important anthropogenic greenhouse gas after carbon dioxide (CO\(_2\)), accounting for about 19% of the total radiative forcing by long-lived greenhouse gases [1]. Atmospheric CH\(_4\) also adversely affects human health as a precursor of tropospheric ozone [2]. Monitoring long-term CH\(_4\) variability is therefore crucial for tracking emission reductions [3]. In this assessment, spatial seasonal means and trends of atmospheric CH\(_4\) are analysed using the XCH\(_4\) Level 3 gridded product (Obs4MIPs, version 4.5 [4]), following an approach similar to [5]. We also evaluate the uncertainty associated with this dataset.

The Obs4MIPs XCH\(_4\) product is generated by spatial (5°×5°) and temporal (monthly) gridding of the corresponding EMMA Level 2 data product (for more details, see [6]). The EMMA algorithm analyses an ensemble of Level 2 datasets from different retrieval algorithms and generates a dataset containing XCH\(_4\) from individual retrievals: for each month and 10°×10° grid box, it selects the algorithm whose grid-box mean is closest to the median. The list of retrieval algorithms considered is available in [6]. These algorithms are optimised for different instruments (SCIAMACHY, GOSAT, GOSAT-2), which measure backscattered solar radiation in the near-infrared, in the O\(_2\) absorption band as well as in the CO\(_2\) or CH\(_4\) absorption bands.
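The median-based selection step described above can be illustrated with a short NumPy sketch. It is a simplified illustration under stated assumptions, not the EMMA implementation: the function name `select_closest_to_median` and the input layout (one grid-box mean XCH\(_4\) value per algorithm, month and 10°×10° box) are hypothetical, and all other steps of EMMA described in [5] and [6] are omitted.

```python
import warnings

import numpy as np


def select_closest_to_median(box_means: np.ndarray) -> np.ndarray:
    """Index of the algorithm whose grid-box mean is closest to the median.

    Hypothetical helper. box_means: shape (n_algorithms, n_months, n_boxes),
    grid-box mean XCH4 of each retrieval algorithm (NaN where it has no data).
    Returns shape (n_months, n_boxes); -1 where no algorithm has data.
    """
    with warnings.catch_warnings():
        # Boxes without any data produce an all-NaN slice; handled below
        warnings.simplefilter("ignore", RuntimeWarning)
        median = np.nanmedian(box_means, axis=0)
    distance = np.abs(box_means - median)  # broadcasts over the algorithm axis
    # Algorithms without data are treated as infinitely far, so never selected
    distance = np.where(np.isnan(distance), np.inf, distance)
    selected = np.argmin(distance, axis=0)
    selected[np.all(np.isnan(box_means), axis=0)] = -1
    return selected


# Three hypothetical algorithms, one month, three grid boxes (ppb)
box_means = np.array([
    [[1850.0, 1900.0, np.nan]],
    [[1860.0, np.nan, np.nan]],
    [[1875.0, np.nan, np.nan]],
])
print(select_closest_to_median(box_means))  # [[ 1  0 -1]]
```

With an even number of available algorithms the median falls between two of them, and `np.argmin` simply returns the first of any equally distant algorithms; how EMMA itself handles such cases is not covered by this sketch.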

Code is included for transparency and for learning purposes, giving users the chance to adapt the code used for the assessment as they wish. Users should always check the product documentation and associated peer-reviewed papers for a more complete account of the issues discussed here (e.g., [7], [8]).

📢 Quality assessment statements#

These are the key outcomes of this assessment:

The dataset “Methane data from 2002 to present derived from satellite observations” can be used to evaluate CH\(_4\) mean values, climatology and growth rate over the globe, hemispheres or large regions.

Caution should be exercised in certain regions (high latitudes, regions with frequent cloud cover, oceans), where data availability varies over the time span of the dataset: this must be carefully considered when evaluating global or hemispheric information.

For data in high-latitude regions or in regions with frequent cloud cover, users should consult the uncertainty and quality flags as appropriate for their applications. In addition, for the period November 2005 - March 2009 only values over land are available, which must be taken into account in possible applications of this dataset.

As the uncertainties associated with the dataset are not constant over space and time, users are advised to examine how they evolve over the spatial regions and time periods of interest. The highest uncertainty values were found over high latitudes, the Himalayas and the tropical rainforest zone.

📋 Methodology#

Spatial seasonal means and trends are presented and assessed using the XCH\(_4\) v4.5 Level 3 gridded product (Obs4MIPs), which has been generated using the Level 2 EMMA products [4] as input.

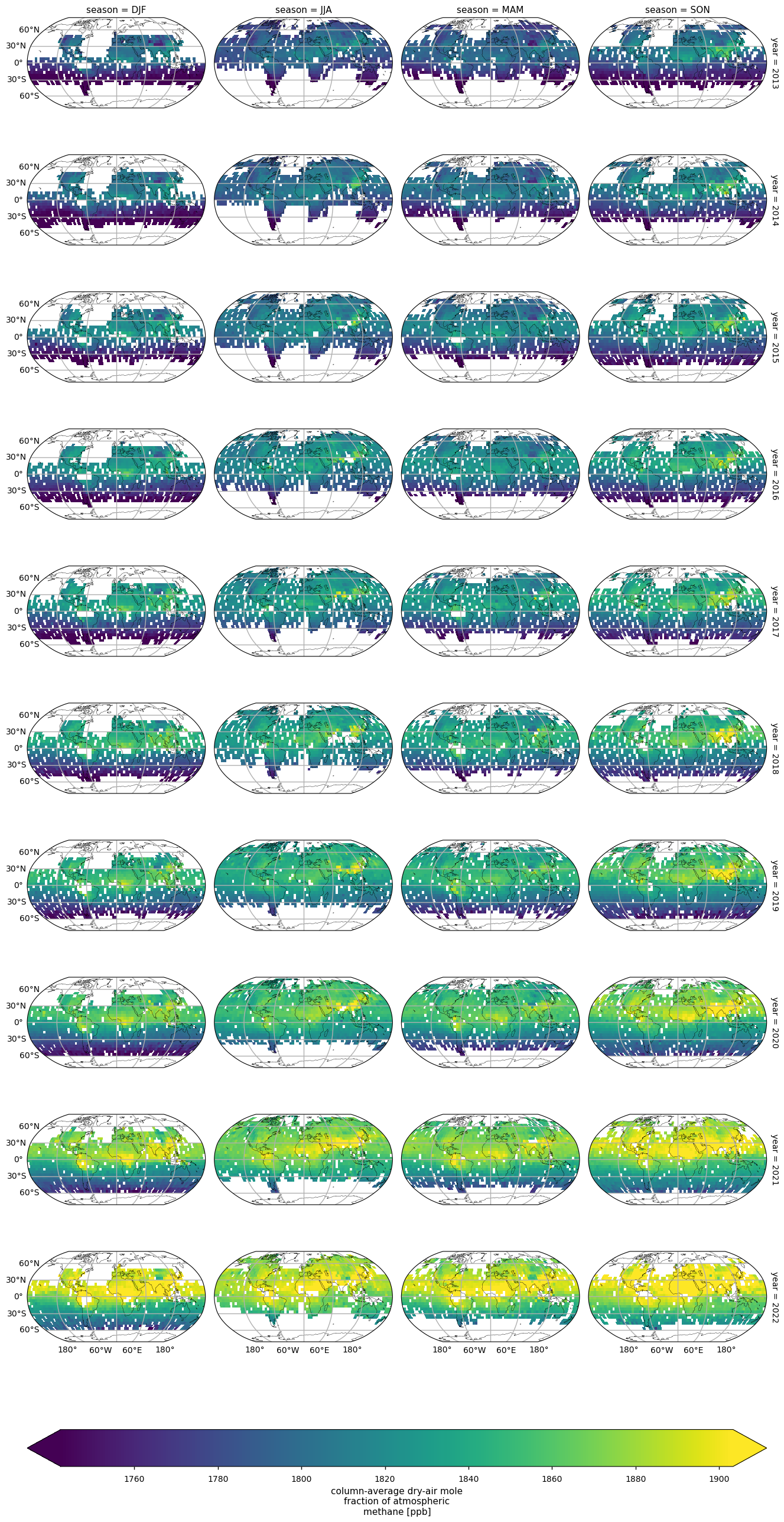

To show how data coverage varies between years and seasons, we have calculated and plotted the average XCH\(_4\) values for the different seasons and years over the period 2013-2022. This period was chosen to allow comparison of the calculated trends with the results in [1].

Spatial trends are calculated using a linear model (i.e., the Theil-Sen slope estimator) applied to monthly anomalies (i.e., actual monthly values minus climatological monthly means). This approach should be treated with caution, as the long-term trend of atmospheric CH\(_4\) is not strictly linear. The statistical significance of the trends is assessed using the Mann-Kendall test. Similar to [5], only land pixels are considered, to avoid artefacts related to different data availability over oceans (the data product is land only for 2003-2008).
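The anomaly definition above can be illustrated with a minimal NumPy sketch for a single pixel. The function name `monthly_anomalies` is hypothetical and this is not the notebook's own anomaly function; it assumes the climatology is simply the mean of all available values of each calendar month over the analysis period.

```python
import warnings

import numpy as np


def monthly_anomalies(values: np.ndarray, months: np.ndarray) -> np.ndarray:
    """Actual monthly values minus the climatological mean of the same month.

    Hypothetical helper. values: monthly XCH4 series (ppb) for one pixel,
    NaN where missing. months: calendar month (1-12) of each value.
    """
    anomalies = np.full(values.shape, np.nan)
    for month in range(1, 13):
        sel = months == month
        if not sel.any():
            continue
        with warnings.catch_warnings():
            # A calendar month with no valid data yields NaN anomalies
            warnings.simplefilter("ignore", RuntimeWarning)
            climatology = np.nanmean(values[sel])
        anomalies[sel] = values[sel] - climatology
    return anomalies


# Two hypothetical years restricted to January and February (ppb)
values = np.array([1850.0, 1852.0, 1860.0, 1864.0])
months = np.array([1, 2, 1, 2])
print(monthly_anomalies(values, months))  # [-5. -6.  5.  6.]
```

For gridded data held in xarray, the same operation is commonly written as `da.groupby("time.month") - da.groupby("time.month").mean("time")`.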

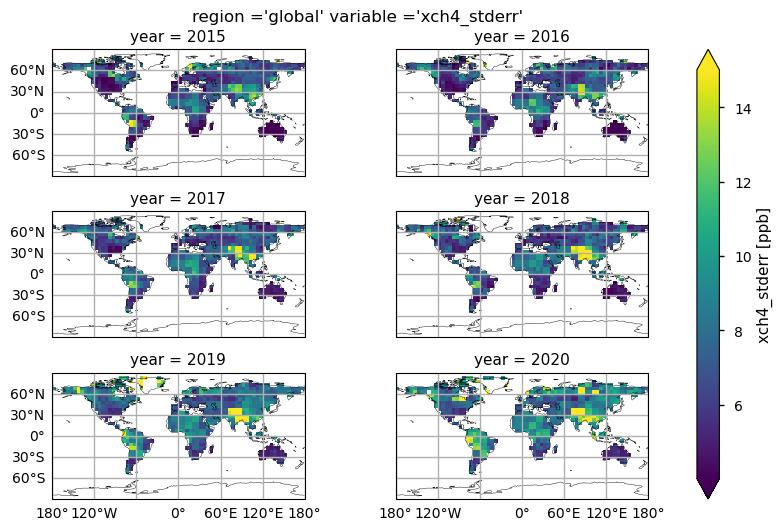

The global maps of the product uncertainties (“xch4_stderr”) for a specific subset of years (2015-2020) are shown, together with the time series of selected XCH\(_4\) variables (“xch4”, “xch4_stderr” and “xch4_nobs”). Please note that this analysis can be extended to one additional variable, not shown here (“xch4_stddev”), or can be customized by the user for specific regions of interest.

The analysis and results are organised in the following steps, which are detailed in the sections below:

1. Choose the data to use and set-up the code

Import all the relevant packages.

Choose the temporal and spatial coverage, land mask, and possible spatial regions for the analysis.

Cache needed functions.

2. Retrieve XCH\(_4\) data (Obs4MIPs)

In this section, we define the data request to CDS.

3. Compute and plot the global variability of seasonal XCH\(_4\)

To show how data coverage varies between years and seasons, we have plotted maps of the average XCH\(_4\) values for each season and year. To keep the number of maps manageable, this analysis has been limited to the period 2013-2022. Seasons are defined as December-February (DJF), March-May (MAM), June-August (JJA) and September-November (SON).

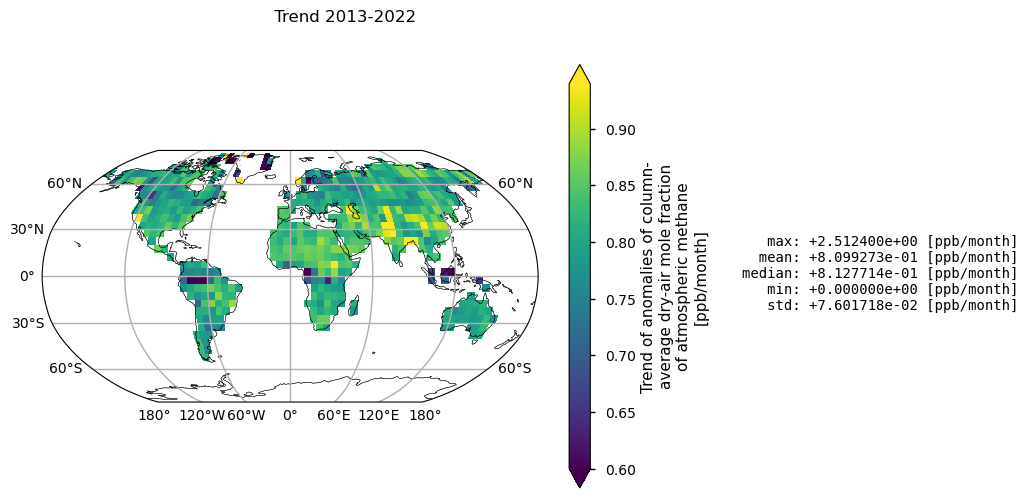

4. Compute global and spatial trends

Trends (over 2013-2022) are calculated using a linear model (i.e., Theil-Sen slope estimator) over monthly anomalies (i.e., actual monthly values minus climatological monthly means). The statistical significance of the trends is assessed using the Mann-Kendall test.

5. Analysis concerning XCH\(_4\) uncertainties

Annual global maps of the XCH\(_4\) uncertainties are presented, for a subset of data (2015-2020).

Global time series of XCH\(_4\), related uncertainties and data availability over the entire data period (2003-2022) are shown.

📈 Analysis and results#

1. Choose the data to use and set-up the code#

Import all relevant packages#

In this section, we import all the relevant packages needed for running the notebook.

Choose temporal and spatial coverage, land mask#

In this section, we define the parameters used by the code (which can be customized by the user), i.e.:

the temporal period of analysis;

the activation/deactivation of the land masking;

the regions selected for the analysis. Please note that, for this notebook, only the global maps and time series are reported.

The analyses presented in this notebook cover different time periods:

to allow comparison of our results with the existing literature [1], the global variability and trend analyses are limited to 2013-2022;

the global plots of the XCH\(_4\) uncertainties refer to the years from 2015 to 2020;

the time series of XCH\(_4\), related uncertainties and data availability cover the entire period of data coverage.

Cache needed functions#

In this section, we cache the functions used in the analyses.

The function `get_da` (`get_da_nomask`) subsets the data for the defined time period and spatial region, applying (not applying) the land mask as a function of `min_land_fraction`.

The function `convert_units` rescales the XCH\(_4\) mole fraction to parts per billion (ppb).

The function `seasonal_weighted_mean` extracts the regional means over the selected domains. It uses spatial weighting to account for the latitudinal dependence of the grid-cell area in the regular lon/lat grid. It is used by the function `compute_seasonal_timeseries_nomask` to provide the seasonal XCH\(_4\) average value for each year (Fig. 1).

The function `compute_anomaly_trends` calculates the trend and its statistical significance.

The function `compute_monthly_anomalies` derives the monthly XCH\(_4\) anomalies before the trends are calculated.

The function `mask_scale_and_regionalise` extracts the XCH\(_4\) data over the selected spatial region. It uses spatial weighting to account for the latitudinal dependence of the grid-cell area in the regular lon/lat grid, calls the `convert_units` function to rescale the values to ppb, and applies the threshold (if any) on the minimum land fraction.
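The land masking, unit conversion and latitude weighting described for `mask_scale_and_regionalise` and `seasonal_weighted_mean` can be sketched as a single stand-alone helper. The name `regional_mean_ppb` is hypothetical and this is not the notebook code; it assumes the input XCH\(_4\) is a dimensionless mole fraction, latitudes are grid-cell centres, and cell areas are proportional to the cosine of latitude.

```python
import numpy as np


def regional_mean_ppb(xch4, lat, land_fraction, min_land_fraction=0.5):
    """Area-weighted mean XCH4 (ppb) of one lat/lon field (hypothetical helper).

    xch4: mole fraction (mol/mol), shape (n_lat, n_lon), NaN where missing.
    lat: latitude of each grid row in degrees, shape (n_lat,).
    land_fraction: shape (n_lat, n_lon), values between 0 and 1.
    min_land_fraction: keep cells with land_fraction above it; None disables masking.
    """
    field = np.asarray(xch4, dtype=float) * 1e9  # mole fraction -> ppb
    if min_land_fraction is not None:
        field = np.where(np.asarray(land_fraction) > min_land_fraction, field, np.nan)
    # On a regular lon/lat grid, cell area scales with cos(latitude)
    weights = np.broadcast_to(np.cos(np.deg2rad(lat))[:, None], field.shape)
    valid = ~np.isnan(field)
    if not valid.any():
        return float("nan")
    return float(np.sum(field[valid] * weights[valid]) / np.sum(weights[valid]))


lat = np.array([0.0, 60.0])
xch4 = np.array([[1.80e-6, 1.82e-6], [1.90e-6, 1.92e-6]])
land = np.array([[1.0, 0.2], [0.9, 0.0]])
print(regional_mean_ppb(xch4, lat, land))        # land cells only
print(regional_mean_ppb(xch4, lat, land, None))  # no land mask
```

Without weighting, the many small high-latitude cells would be over-represented in any global or hemispheric mean.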

2. Retrieve XCH\(_4\) data (Obs4MIPs)#

In this section, we define the data request to CDS (data product Obs4MIPs, Level 3, version 4.5, XCH\(_4\)) and download the dataset.



3. Compute and plot the global variability of seasonal XCH\(_4\)#

To show how data coverage varies between years and seasons, in this section we plot maps of the average XCH\(_4\) values for each season and year. To keep the number of plots manageable, the analysis is restricted to the period 2013-2022. Seasons are defined as: December-February (DJF), March-May (MAM), June-August (JJA), and September-November (SON). The seasonality of measurement availability is evident: values in the Southern Hemisphere (SH) mid and high latitudes are available from September to March, whereas values for the Northern Hemisphere (NH) mid and high latitudes are mostly available from April to August.
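The seasonal pattern in data availability can also be quantified directly, as a complement to the maps. The sketch below computes, for each season and latitude row, the fraction of monthly grid-cell samples that hold data. The helper `seasonal_coverage_by_lat` is hypothetical and not part of the notebook, and the availability pattern in the example is constructed to mimic the description above, not taken from the dataset.

```python
import numpy as np

SEASON_MONTHS = {"DJF": (12, 1, 2), "MAM": (3, 4, 5), "JJA": (6, 7, 8), "SON": (9, 10, 11)}


def seasonal_coverage_by_lat(valid: np.ndarray, months: np.ndarray) -> dict:
    """Fraction of available (month, cell) samples per season and latitude row.

    Hypothetical helper. valid: boolean array (n_time, n_lat, n_lon), True where
    XCH4 is available. months: calendar month (1-12) of each time step.
    """
    coverage = {}
    for season, season_months in SEASON_MONTHS.items():
        sel = np.isin(months, season_months)
        coverage[season] = valid[sel].mean(axis=(0, 2)) if sel.any() else None
    return coverage


# Hypothetical grid with one SH row (50°S) and one NH row (50°N), one year
months = np.arange(1, 13)
valid = np.zeros((12, 2, 1), dtype=bool)
valid[:, 0, 0] = np.isin(months, (9, 10, 11, 12, 1, 2, 3))  # SH: September-March
valid[:, 1, 0] = np.isin(months, (4, 5, 6, 7, 8))           # NH: April-August
for season, frac in seasonal_coverage_by_lat(valid, months).items():
    print(season, frac)
```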


Global annual variability of seasonal XCH\(_4\) over 2013-2022. The panels represent the individual seasons (columns) and years (rows). Seasons are defined as: December-February (DJF), March-May (MAM), June-August (JJA), and September-November (SON).

4. Compute global and spatial trends#

In this section, XCH\(_4\) spatial trends are calculated and their statistical significance is assessed for land pixels (“land_fraction > 0.5”). Global statistics for individual pixels are also reported, in ppb/month. Note that the low growth rates observed in the tropics are associated with large statistical errors in the trend calculations, probably because frequent cloud cover results in sparse data availability. Over Greenland, by contrast, high retrieval uncertainty related to the high surface albedo may be a factor.
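The trend and significance calculation described above can be sketched in plain NumPy for a single pixel's anomaly series. This is an independent illustration, not the notebook's `compute_anomaly_trends`; the name `theil_sen_mann_kendall` is hypothetical, and SciPy offers a library version of the estimator as `scipy.stats.theilslopes`. The Mann-Kendall variance below omits the tie correction and ignores serial correlation in monthly anomalies, which can overstate significance.

```python
import math

import numpy as np


def theil_sen_mann_kendall(y: np.ndarray, alpha: float = 0.05):
    """Theil-Sen slope (units of y per month) and two-sided Mann-Kendall test.

    Hypothetical helper. y: monthly anomalies at consecutive months; NaN
    values are skipped. Returns (slope, p_value, significant).
    """
    t = np.arange(y.size, dtype=float)  # time in months since the start
    keep = ~np.isnan(y)
    t, y = t[keep], y[keep]
    n = y.size
    if n < 3:
        return math.nan, math.nan, False
    i, j = np.triu_indices(n, k=1)  # every pair of samples with i < j
    dy = y[j] - y[i]
    slope = float(np.median(dy / (t[j] - t[i])))  # median of pairwise slopes
    # Mann-Kendall S statistic and its variance, without tie correction
    s = float(np.sum(np.sign(dy)))
    var_s = n * (n - 1) * (2 * n + 5) / 18.0
    z = (s - np.sign(s)) / math.sqrt(var_s)  # continuity-corrected score
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal p-value
    return slope, p_value, p_value < alpha


# Hypothetical 10-year series: 0.8 ppb/month trend, periodic noise, one gap
months = np.arange(120)
y = 0.8 * months + 3.0 * np.sin(months)
y[17] = np.nan
slope, p_value, significant = theil_sen_mann_kendall(y)
print(f"{slope:.2f} ppb/month = {12 * slope:.1f} ppb/yr, p={p_value:.1e}, significant={significant}")
```

Multiplying the monthly slope by 12 gives the ppb/yr units used for the global growth rate.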

For 2013-2022, the mean global XCH\(_4\) growth rate is 9.71 \(\pm\) 2.21 ppb/yr (\(\pm\) standard error of the trend calculation). Taking into account the associated uncertainties, the different calculation methods and the different vertical representativeness of satellite observations with respect to near-surface measurements, this value is reasonably consistent with the mean absolute increase of 10.20 ppb/yr over the past 10 years, as reported by the WMO’s global in-situ observations [1].
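Because the trend maps use ppb/month while the global growth rate is given in ppb/yr, a simple worked conversion and comparison may help. It uses only the numbers quoted above and does not account for the differences in method and vertical representativeness mentioned in the text:

\[
\frac{9.71\ \text{ppb yr}^{-1}}{12\ \text{months yr}^{-1}} \approx 0.81\ \text{ppb month}^{-1}
\]

\[
\lvert 10.20 - 9.71 \rvert\ \text{ppb yr}^{-1} = 0.49\ \text{ppb yr}^{-1} \approx 0.22 \times 2.21\ \text{ppb yr}^{-1}
\]

That is, the difference from the WMO in-situ value is well within one standard error of the satellite-based estimate.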


Trends (upper map) and related standard error (bottom map) of XCH\(_4\), given in ppb/month, calculated using a linear model (Theil-Sen) with the Mann-Kendall test for statistical significance. For each pixel, trends are calculated from monthly anomalies over land (“land_fraction > 0.5”). In the upper map, shaded areas indicate pixels that did not pass the Mann-Kendall significance test. Global statistics for individual pixels are shown on the right of the plot.

5. Analysis concerning XCH\(_4\) uncertainties#

Global maps of XCH\(_4\) uncertainties#

In this section, we show the multi-year (2015-2020) global maps of the reported XCH\(_4\) uncertainties, i.e., the “xch4_stderr” variable. This parameter is defined as the standard error of the average, including single-sounding noise and potential seasonal and regional biases [4]. The yearly averages are calculated by averaging the monthly values provided by the dataset. Some features persist throughout all the selected years, with higher uncertainties mainly over the Himalayas, South Asia, high latitudes, and the tropical rainforest zone. This is mainly due to sparse sampling caused by frequent cloud cover, as well as to large solar zenith angles at high latitudes, which are a challenge for accurate XCH\(_4\) retrievals [5]. It is worth noting that regions characterised by large uncertainties were also affected by spatial trends that deviated from the global average (see the previous figure). Users should therefore exercise caution when deriving long-term trends for regions affected by high uncertainty.
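The yearly averaging described above can be sketched as follows, assuming each month with data receives equal weight. The helper `annual_means` is hypothetical. Note that averaging monthly “xch4_stderr” values gives a typical monthly uncertainty for each year, not a propagated standard error of the annual mean.

```python
import warnings

import numpy as np


def annual_means(monthly: np.ndarray, years: np.ndarray) -> dict:
    """One map per year: mean of the available monthly values in each cell.

    Hypothetical helper. monthly: array (n_time, n_lat, n_lon), e.g.
    xch4_stderr, NaN where missing. years: calendar year of each time step.
    """
    maps = {}
    for year in np.unique(years):
        with warnings.catch_warnings():
            # Cells without data in a whole year stay NaN ("Mean of empty slice")
            warnings.simplefilter("ignore", RuntimeWarning)
            maps[int(year)] = np.nanmean(monthly[years == year], axis=0)
    return maps


# Hypothetical 2 years x 12 months on a 1x2 grid (ppb)
years = np.repeat([2015, 2016], 12)
monthly = np.full((24, 1, 2), np.nan)
monthly[:12, 0, :] = [10.0, 12.0]  # 2015: both cells observed every month
monthly[12:18, 0, 0] = 8.0         # 2016: cell 0 observed for six months only
print(annual_means(monthly, years))
```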

Multi-year global distribution of XCH\(_4\) standard error (from 2015 to 2020), derived from the XCH4_OBS4MIPS dataset (version 4.5). The title indicates the spatial region and the selected variable.

Time series analysis for the different variables#

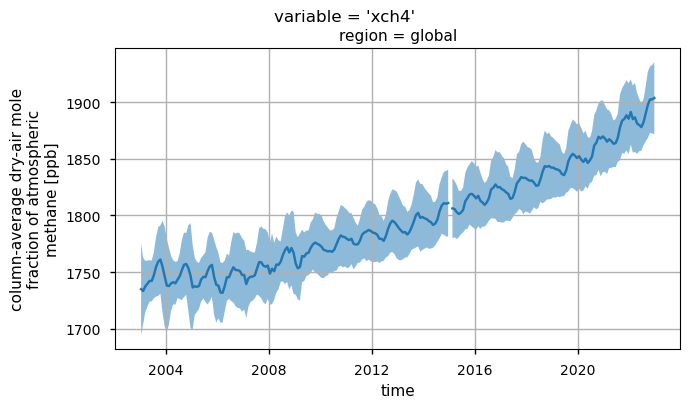

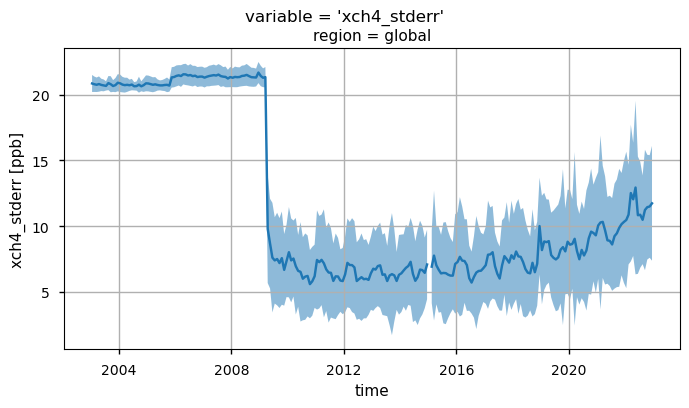

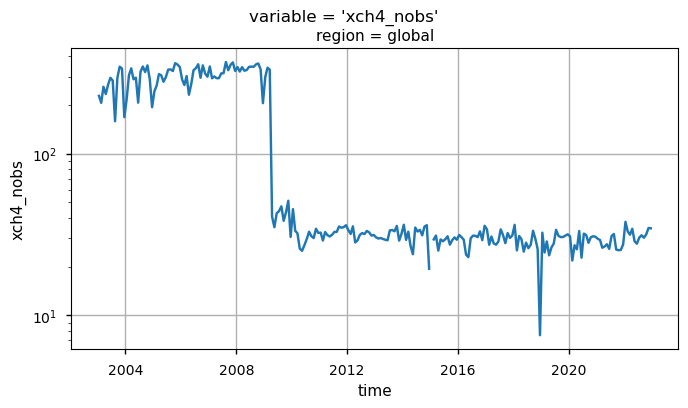

In this section, we show the time series of three XCH\(_4\) variables (i.e., “xch4”, “xch4_stderr”, and “xch4_nobs”) over the entire dataset. For each variable, the plot displays the monthly global spatial average, along with the corresponding monthly standard deviation (except for “xch4_nobs”). This analysis provides the user with:

the global time series of XCH\(_4\) values (“xch4”), highlighting the overall positive trend;

the change over time of the uncertainty associated with the XCH\(_4\) values (“xch4_stderr”);

the time series of “xch4_nobs”, which denotes the number of individual XCH\(_4\) Level 2 observations used to compute the monthly Level 3 data.
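The monthly spatial average and its ±1 standard deviation band can be sketched as below. The helper `global_mean_and_std` is hypothetical, and it assumes that both statistics use cosine-of-latitude area weights; the text does not state whether the standard deviation shown in the plots is weighted.

```python
import numpy as np


def global_mean_and_std(field: np.ndarray, lat: np.ndarray):
    """Area-weighted spatial mean and standard deviation for each time step.

    Hypothetical helper. field: array (n_time, n_lat, n_lon), NaN where
    missing. lat: latitude of each grid row in degrees.
    """
    w = np.broadcast_to(np.cos(np.deg2rad(lat))[None, :, None], field.shape)
    w = np.where(np.isnan(field), 0.0, w)  # missing cells get zero weight
    x = np.nan_to_num(field)
    wsum = w.sum(axis=(1, 2))
    with np.errstate(invalid="ignore", divide="ignore"):  # empty months -> NaN
        mean = (w * x).sum(axis=(1, 2)) / wsum
        var = (w * (x - mean[:, None, None]) ** 2).sum(axis=(1, 2)) / wsum
    return mean, np.sqrt(var)


# Hypothetical 2-month example on a 2x2 grid; the second month has no data
lat = np.array([0.0, 60.0])
field = np.full((2, 2, 2), np.nan)
field[0] = [[1800.0, 1800.0], [1900.0, 1900.0]]
mean, std = global_mean_and_std(field, lat)
print(mean, std)
```

For “xch4_nobs”, only the mean curve would be plotted, as in the figure.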

XCH\(_4\) values were nearly constant until 2007, after which a positive trend is evident. This is likely due to a combination of increasing natural (e.g., wetlands) and anthropogenic (e.g., fossil-fuel related) emissions, and possibly decreasing sinks, although this is still under investigation (see [3] and references therein).

In the uncertainties (“xch4_stderr”), the most evident feature is the sharp decrease after 2009, likely due to the introduction of algorithms based on GOSAT observations into the calculation of the median XCH\(_4\) data [6]. A renewed increase in the uncertainties is observed from 2020 onwards, probably linked to the introduction of GOSAT-2 measurements [6].

The temporal variability of “xch4_nobs” traces changes over time in input data availability and in the number of algorithms used to obtain the merged Level 2 data products from which the Obs4MIPs product is derived (note that the standard deviations are not shown for “xch4_nobs”, to improve plot readability). The first period (up to April 2009) is characterised by a large number of observations from the SCIAMACHY WFMD product only. The following period (up to 2022) reflects the reduced number of soundings provided by SCIAMACHY, together with the contributions from GOSAT and GOSAT-2 (from January 2019). For details about the different Level 2 products used as input for the generation of the Level 3 XCH\(_4\) data, see [5] and [6].

Please note that this analysis can be customized by the user to limit it to specific regions, or to include one additional variable (not shown here): “xch4_stddev”, which represents the standard deviation of the XCH\(_4\) Level 2 observations within each grid box.

Global monthly time series of XCH\(_4\) (top panel) and their associated uncertainties (middle panel) for the entire dataset. The blue lines represent the monthly spatial average, while the shaded areas indicate \(\pm\)1 standard deviation. The bottom panel shows the time series of monthly spatial average of the number of individual XCH\(_4\) Level 2 observations used to compute Obs4MIPs (Level 3) data. The main titles indicate the variable shown, while the subtitles specify the corresponding region.

ℹ️ If you want to know more#

Key resources#

The CDS catalogue entries for the data used were:

Methane data from 2002 to present derived from satellite observations: https://cds.climate.copernicus.eu/datasets/satellite-methane?tab=overview

Code libraries used:

C3S EQC custom functions,

c3s_eqc_automatic_quality_control, prepared by B-Open

Users interested in obtaining updated figures for methane growth rates and global trends are directed to official C3S sources for precise reporting: [7], [8].

Users interested in near-real-time detection of methane emission hot spots may consider using the CAMS Methane Hotspot Explorer: https://atmosphere.copernicus.eu/ghg-services/cams-methane-hotspot-explorer?utm_source=press&utm_medium=referral&utm_campaign=CH4-app-2025

References#

[1] World Meteorological Organization (2023). WMO Greenhouse Gas Bulletin, No. 19, ISSN 2078-0796.

[2] West, J. J., Fiore, A. M., Horowitz, L. W., and Mauzerall, D. L. (2006). Global health benefits of mitigating ozone pollution with methane emission controls. Proceedings of the National Academy of Sciences USA, 103, 3988–3993.

[3] Saunois, M., Martinez, A., Poulter, B., Zhang, Z., Raymond, P. A., Regnier, P., Canadell, J. G., Jackson, R. B., Patra, P. K., Bousquet, P., Ciais, P., Dlugokencky, E. J., Lan, X., Allen, G. H., Bastviken, D., Beerling, D. J., Belikov, D. A., Blake, D. R., Castaldi, S., Crippa, M., Deemer, B. R., Dennison, F., Etiope, G., Gedney, N., Höglund-Isaksson, L., Holgerson, M. A., Hopcroft, P. O., Hugelius, G., Ito, A., Jain, A. K., Janardanan, R., Johnson, M. S., Kleinen, T., Krummel, P. B., Lauerwald, R., Li, T., Liu, X., McDonald, K. C., Melton, J. R., Mühle, J., Müller, J., Murguia-Flores, F., Niwa, Y., Noce, S., Pan, S., Parker, R. J., Peng, C., Ramonet, M., Riley, W. J., Rocher-Ros, G., Rosentreter, J. A., Sasakawa, M., Segers, A., Smith, S. J., Stanley, E. H., Thanwerdas, J., Tian, H., Tsuruta, A., Tubiello, F. N., Weber, T. S., van der Werf, G. R., Worthy, D. E. J., Xi, Y., Yoshida, Y., Zhang, W., Zheng, B., Zhu, Q., Zhu, Q., and Zhuang, Q. (2025). Global Methane Budget 2000–2020, Earth System Science Data, 17, 1873–1958.

[4] Buchwitz, M. (2024). Product User Guide and Specification (PUGS) – Main document for Greenhouse Gas (GHG: CO\(_2\) & CH\(_4\)) data set CDR7 (01.2003-12.2022), C3S project 2021/C3S2_312a_Lot2_DLR/SC1, v7.3.

[5] Reuter, M., Buchwitz, M., Schneising, O., Noël, S., Bovensmann, H., Burrows, J. P., Boesch, H., Di Noia, A., Anand, J., Parker, R. J., Somkuti, P., Wu, L., Hasekamp, O. P., Aben, I., Kuze, A., Suto, H., Shiomi, K., Yoshida, Y., Morino, I., Crisp, D., O’Dell, C. W., Notholt, J., Petri, C., Warneke, T., Velazco, V. A., Deutscher, N. M., Griffith, D. W. T., Kivi, R., Pollard, D. F., Hase, F., Sussmann, R., Té, Y. V., Strong, K., Roche, S., Sha, M. K., De Mazière, M., Feist, D. G., Iraci, L. T., Roehl, C. M., Retscher, C., and Schepers, D. (2020). Ensemble-based satellite-derived carbon dioxide and methane column-averaged dry-air mole fraction data sets (2003–2018) for carbon and climate applications, Atmospheric Measurement Techniques, 13, 789–819.

[6] Reuter, M. and Buchwitz, M. (2024). Algorithm Theoretical Basis Document (ATBD) – ANNEX D for products XCO2_EMMA, XCH4_EMMA, XCO2_OBS4MIPS, XCH4_OBS4MIPS (v4.5, CDR7, 2003-2022), C3S project 2021/C3S2_312a_Lot2_DLR/SC1, v7.1b.

[7] Copernicus Climate Change Service (C3S) and World Meteorological Organization (WMO). (2025). European State of the Climate 2024. https://doi.org/10.24381/14j9-s541

[8] Copernicus Climate Change Service (C3S). (2025). Global Climate Highlights 2024.