Tutorial on how to import, subset, aggregate and export CAMS Data#

This tutorial provides practical examples that demonstrate how to download, read into Xarray, subset, aggregate and export data from the Atmosphere Data Store (ADS) of the Copernicus Atmosphere Monitoring Service (CAMS).


Install ADS API#

We will need to install the Application Programming Interface (API) of the Atmosphere Data Store (ADS). This will allow us to programmatically download data.

Note

Note the exclamation mark at the start of the line of code below. It tells the notebook to run the line as a shell command rather than as Python code.

!pip install cdsapi

Import libraries#

Here we import a number of publicly available Python packages, needed for this tutorial.

# CDS API

import cdsapi

# Library to extract data

from zipfile import ZipFile

# Libraries to read and process arrays

import numpy as np

import xarray as xr

import pandas as pd

# Disable warnings for data download via API

import urllib3

urllib3.disable_warnings()

Access data#

To access data from the ADS, you first need to register (if you have not already done so) by visiting https://ads.atmosphere.copernicus.eu/ and selecting “Login/Register”.

To obtain data programmatically from the ADS, you will need an API key. This can be found on the page https://ads.atmosphere.copernicus.eu/how-to-api. Your key will appear automatically in the black window on that page, provided you have registered and are logged into the ADS. Your API key is the entire string of characters that appears after key:

Now copy your API key into the code cell below, replacing ####### with your key.

URL = 'https://ads.atmosphere.copernicus.eu/api'

# Replace the hashtags with your key:

KEY = '#############################'
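Pasting a key directly into a notebook makes it easy to share by accident. A minimal sketch of one alternative is to read the key from an environment variable. The variable name `ADS_API_KEY` and the helper `load_api_key` are hypothetical names chosen for this sketch; they are not part of `cdsapi`.

```python
import os

# Hypothetical environment variable name, chosen for this sketch only.
ENV_VAR = "ADS_API_KEY"


def load_api_key(env_var=ENV_VAR, placeholder="#######"):
    """Return the key stored in the environment, or the placeholder if unset."""
    return os.environ.get(env_var, placeholder)


URL = "https://ads.atmosphere.copernicus.eu/api"
KEY = load_api_key()

if KEY == "#######":
    print(f"{ENV_VAR} is not set; paste your key into KEY instead.")
```

With this approach the notebook itself never contains the key, so it can be shared or committed without editing.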

Here we specify a data directory into which we will download our data and all output files that we will generate:

DATADIR = '.'

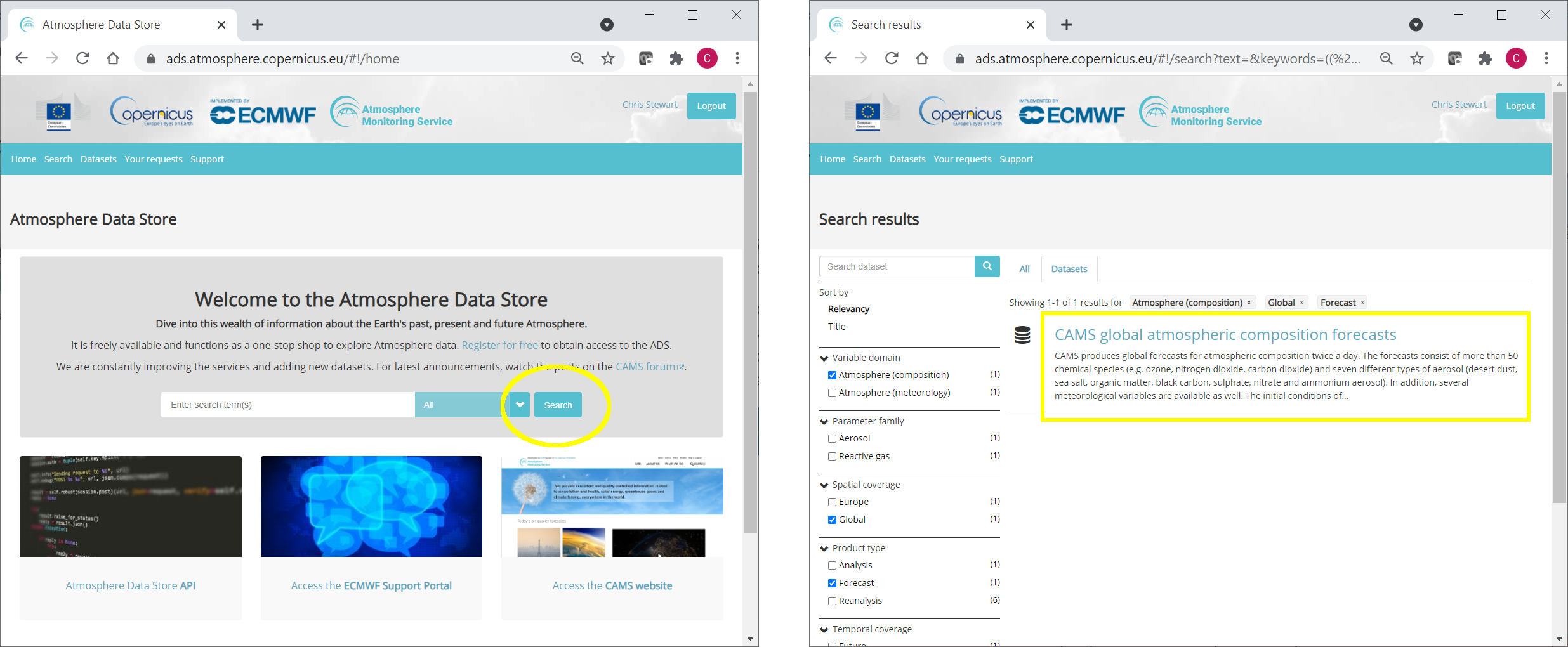

The data we will download and inspect in this tutorial comes from the CAMS Global Atmospheric Composition Forecast dataset. This can be found in the Atmosphere Data Store (ADS) by scrolling through the datasets, or applying search filters as illustrated here:

Having selected the correct dataset, we now need to specify what product type, variables, temporal and geographic coverage we are interested in. These can all be selected in the “Download data” tab. In this tab a form appears in which we will select the following parameters to download:

Variables (Single level): Dust aerosol optical depth at 550nm, Organic matter aerosol optical depth at 550nm, Total aerosol optical depth at 550nm

Date: Start: 2021-08-01, End: 2021-08-08

Time: 00:00, 12:00 (default)

Leadtime hour: 0 (only analysis)

Type: Forecast (default)

Area: Restricted area: North: 90, East: 180, South: 0, West: -180

Format: Zipped netCDF (experimental)

At the end of the download form, select “Show API request”. This will reveal a block of code, which you can simply copy and paste into a cell of your Jupyter Notebook (see cell below)…

Note

Before running this code, ensure that you have accepted the terms and conditions. This is something you only need to do once for each CAMS dataset. You will find the option to do this by selecting the dataset in the ADS, then scrolling to the end of the Download data tab.

dataset = "cams-global-atmospheric-composition-forecasts"

request = {

'variable': ['dust_aerosol_optical_depth_550nm', 'organic_matter_aerosol_optical_depth_550nm', 'total_aerosol_optical_depth_550nm'],

'date': ['2021-08-01/2021-08-08'],

'time': ['00:00', '12:00'],

'leadtime_hour': ['0'],

'type': ['forecast'],

'data_format': 'netcdf_zip',

'area': [90, -180, 0, 180]

}

client = cdsapi.Client(url=URL, key=KEY)

client.retrieve(dataset, request).download(f'{DATADIR}/2021-08_AOD.zip')

2024-09-12 13:09:00,125 INFO Request ID is cc9448c4-2415-4c46-a73f-316d98350daf

2024-09-12 13:09:00,184 INFO status has been updated to accepted

2024-09-12 13:09:01,721 INFO status has been updated to running

2024-09-12 13:11:50,751 INFO Creating download object as zip with files:

['data_sfc.nc']

2024-09-12 13:11:50,752 INFO status has been updated to successful

'./2021-08_AOD.zip'
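Two details of the request are easy to get wrong. The `area` list is ordered North, West, South, East (not the North, East, South, West order of the form summary above), and the date range combined with the two times determines how many time steps to expect. A small sketch in plain Python that checks both; the helper name `build_area` is hypothetical.

```python
from datetime import date


def build_area(north, west, south, east):
    """Return bounds in the [North, West, South, East] order used by the request."""
    if south > north:
        raise ValueError("south must not exceed north")
    return [north, west, south, east]


area = build_area(north=90, west=-180, south=0, east=180)

# Count the time steps implied by 'date' and 'time' in the request.
start, end = date(2021, 8, 1), date(2021, 8, 8)
n_days = (end - start).days + 1   # both end dates are included
times = ["00:00", "12:00"]
n_steps = n_days * len(times)

print(area)
print(n_steps)
```

Eight dates with two times each give 16 time steps, which is a useful number to compare against the dataset once it has been read.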

Read data#

Now that we have downloaded the data, we can read and analyse it.

We have requested the data in NetCDF format. This is a commonly used format for gridded (array-based) scientific data.

To read and process this data we will make use of the Xarray library. Xarray is an open source project and Python package that makes working with labelled multi-dimensional arrays simple and efficient. We will read the data from our NetCDF file into an Xarray “dataset”.

First we extract the downloaded zip file:

# Create a ZipFile object and load the zip file into it

with ZipFile(f'{DATADIR}/2021-08_AOD.zip', 'r') as zipObj:

    # Extract all the contents of the zip file into a directory

    zipObj.extractall(path=f'{DATADIR}/2021-08_AOD/')

For convenience, we create a variable with the name of our downloaded file:

fn = f'{DATADIR}/2021-08_AOD/data_sfc.nc'
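The name `data_sfc.nc` is taken from the download log above. If you would rather not hard-code it, a sketch like the following lists the archive members first. To stay self-contained it builds a tiny stand-in archive in a temporary directory, so the file contents here are placeholders, not real CAMS data.

```python
import tempfile
from pathlib import Path
from zipfile import ZipFile

with tempfile.TemporaryDirectory() as tmp:
    zip_path = Path(tmp) / "demo_AOD.zip"

    # Stand-in archive with one member named like the real download.
    with ZipFile(zip_path, "w") as zf:
        zf.writestr("data_sfc.nc", b"placeholder bytes")

    with ZipFile(zip_path, "r") as zf:
        # Keep only NetCDF members, in case the archive holds other files.
        nc_members = [name for name in zf.namelist() if name.endswith(".nc")]
        zf.extractall(path=Path(tmp) / "demo_AOD")

    # Check that every listed member was actually extracted.
    extracted = [Path(tmp) / "demo_AOD" / name for name in nc_members]
    all_exist = all(p.exists() for p in extracted)

print(nc_members)
```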

Now we can read the data into an Xarray dataset:

# Create Xarray Dataset

ds = xr.open_dataset(fn)

Let’s see how this looks by querying our newly created Xarray dataset …

ds

<xarray.Dataset> Size: 39MB

Dimensions: (forecast_period: 1, forecast_reference_time: 16,

latitude: 226, longitude: 900)

Coordinates:

* forecast_period (forecast_period) timedelta64[ns] 8B 00:00:00

* forecast_reference_time (forecast_reference_time) datetime64[ns] 128B 20...

* latitude (latitude) float64 2kB 90.0 89.6 89.2 ... 0.4 0.0

* longitude (longitude) float64 7kB -180.0 -179.6 ... 179.6

valid_time (forecast_reference_time, forecast_period) datetime64[ns] 128B ...

Data variables:

duaod550 (forecast_period, forecast_reference_time, latitude, longitude) float32 13MB ...

omaod550 (forecast_period, forecast_reference_time, latitude, longitude) float32 13MB ...

aod550 (forecast_period, forecast_reference_time, latitude, longitude) float32 13MB ...

Attributes:

GRIB_centre: ecmf

GRIB_centreDescription: European Centre for Medium-Range Weather Forecasts

GRIB_subCentre: 0

Conventions: CF-1.7

institution: European Centre for Medium-Range Weather Forecasts

history: 2024-09-12T13:11 GRIB to CDM+CF via cfgrib-0.9.1...

We see that the dataset has three variables. Selecting the “show/hide attributes” icons reveals their names: “omaod550” is “Organic Matter Aerosol Optical Depth at 550nm”, “aod550” is “Total Aerosol Optical Depth at 550nm” and “duaod550” is “Dust Aerosol Optical Depth at 550nm”. The dataset also has four dimension coordinates (longitude, latitude, forecast_reference_time and forecast_period), plus the auxiliary coordinate valid_time, which varies along the last two.
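Outside an interactive notebook the “show/hide attributes” icons are not available. A sketch of reading the same names programmatically, using a small synthetic dataset as a stand-in for `ds` (the values are zeros on a made-up 2 x 3 grid; only the variable names and `long_name` strings are copied from the text above):

```python
import numpy as np
import xarray as xr

long_names = {
    "duaod550": "Dust Aerosol Optical Depth at 550nm",
    "omaod550": "Organic Matter Aerosol Optical Depth at 550nm",
    "aod550": "Total Aerosol Optical Depth at 550nm",
}

# Synthetic stand-in for the downloaded dataset (2 x 3 grid of zeros).
demo_ds = xr.Dataset(
    {
        name: (
            ("latitude", "longitude"),
            np.zeros((2, 3), dtype="float32"),
            {"long_name": label},
        )
        for name, label in long_names.items()
    },
    coords={"latitude": [0.4, 0.0], "longitude": [-0.4, 0.0, 0.4]},
)

# Map each short variable name to its descriptive long_name attribute.
found = {
    name: var.attrs.get("long_name", "")
    for name, var in demo_ds.data_vars.items()
}
for name, label in found.items():
    print(f"{name}: {label}")
```

The same loop should work on the real `ds`, assuming its variables carry a `long_name` attribute as the attribute listing suggests.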

We will now look more carefully at the “Total Aerosol Optical Depth at 550nm” variable.

While an Xarray dataset may contain multiple variables, an Xarray data array holds a single multi-dimensional variable and its coordinates. To make the processing of the aod550 data easier, we extract it into an Xarray data array.

# Create Xarray Data Array

da = ds['aod550']

da

<xarray.DataArray 'aod550' (forecast_period: 1, forecast_reference_time: 16,

latitude: 226, longitude: 900)> Size: 13MB

[3254400 values with dtype=float32]

Coordinates:

* forecast_period (forecast_period) timedelta64[ns] 8B 00:00:00

* forecast_reference_time (forecast_reference_time) datetime64[ns] 128B 20...

* latitude (latitude) float64 2kB 90.0 89.6 89.2 ... 0.4 0.0

* longitude (longitude) float64 7kB -180.0 -179.6 ... 179.6

valid_time (forecast_reference_time, forecast_period) datetime64[ns] 128B ...

Attributes: (12/33)

GRIB_paramId: 210207

GRIB_dataType: fc

GRIB_numberOfPoints: 203400

GRIB_typeOfLevel: surface

GRIB_stepUnits: 1

GRIB_stepType: instant

... ...

GRIB_units: ~

long_name: Total Aerosol Optical Depth at ...

units: ~

standard_name: unknown

GRIB_number: 0

GRIB_surface: 0.0

Subset data#

This section provides some selected examples of ways in which parts of a dataset can be extracted. More comprehensive documentation on how to index and select data is available here: https://docs.xarray.dev/en/stable/user-guide/indexing.html.

Temporal subset#

By inspecting the array, we notice that the second of the four dimensions, forecast_reference_time, is time. If we wish to select only one time step, the easiest way to do this is to use positional indexing. The code below creates a Data Array of only the first time step.

time0 = da[:,0,:,:]

time0

<xarray.DataArray 'aod550' (forecast_period: 1, latitude: 226, longitude: 900)> Size: 814kB

[203400 values with dtype=float32]

Coordinates:

* forecast_period (forecast_period) timedelta64[ns] 8B 00:00:00

forecast_reference_time datetime64[ns] 8B 2021-08-01

* latitude (latitude) float64 2kB 90.0 89.6 89.2 ... 0.4 0.0

* longitude (longitude) float64 7kB -180.0 -179.6 ... 179.6

valid_time (forecast_period) datetime64[ns] 8B ...

Attributes: (12/33)

GRIB_paramId: 210207

GRIB_dataType: fc

GRIB_numberOfPoints: 203400

GRIB_typeOfLevel: surface

GRIB_stepUnits: 1

GRIB_stepType: instant

... ...

GRIB_units: ~

long_name: Total Aerosol Optical Depth at ...

units: ~

standard_name: unknown

GRIB_number: 0

GRIB_surface: 0.0

And this creates a Data Array of the first 5 time steps:

time_5steps = da[:,0:5,:,:]

time_5steps

<xarray.DataArray 'aod550' (forecast_period: 1, forecast_reference_time: 5,

latitude: 226, longitude: 900)> Size: 4MB

[1017000 values with dtype=float32]

Coordinates:

* forecast_period (forecast_period) timedelta64[ns] 8B 00:00:00

* forecast_reference_time (forecast_reference_time) datetime64[ns] 40B 202...

* latitude (latitude) float64 2kB 90.0 89.6 89.2 ... 0.4 0.0

* longitude (longitude) float64 7kB -180.0 -179.6 ... 179.6

valid_time (forecast_reference_time, forecast_period) datetime64[ns] 40B ...

Attributes: (12/33)

GRIB_paramId: 210207

GRIB_dataType: fc

GRIB_numberOfPoints: 203400

GRIB_typeOfLevel: surface

GRIB_stepUnits: 1

GRIB_stepType: instant

... ...

GRIB_units: ~

long_name: Total Aerosol Optical Depth at ...

units: ~

standard_name: unknown

GRIB_number: 0

GRIB_surface: 0.0

Another way to select data is to use the .sel() method of xarray. The example below selects all data from the first of August.

firstAug = da.sel(forecast_reference_time='2021-08-01')
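Positional indexing depends on remembering the order of the dimensions. A sketch of name-based equivalents with `.isel()` and `.sel()`, run on a small synthetic array that mimics the dimensions of `da` (random values on a made-up 3 x 4 grid, not CAMS data):

```python
import numpy as np
import xarray as xr

# 16 time steps, 12 hours apart, starting 2021-08-01 00:00.
times = (
    np.datetime64("2021-08-01T00:00") + np.arange(16) * np.timedelta64(12, "h")
).astype("datetime64[ns]")

rng = np.random.default_rng(0)
demo = xr.DataArray(
    rng.random((1, 16, 3, 4)),
    dims=("forecast_period", "forecast_reference_time", "latitude", "longitude"),
    coords={
        "forecast_reference_time": times,
        "latitude": [0.8, 0.4, 0.0],
        "longitude": [0.0, 0.4, 0.8, 1.2],
    },
    name="aod550",
)

# Same selections as demo[:, 0, :, :] and demo[:, 0:5, :, :], by dimension name.
first_step = demo.isel(forecast_reference_time=0)
first_five = demo.isel(forecast_reference_time=slice(0, 5))

# Label-based range; both end dates are included.
period = demo.sel(forecast_reference_time=slice("2021-08-01", "2021-08-03"))

print(first_step.dims)
print(first_five.sizes["forecast_reference_time"])
print(period.sizes["forecast_reference_time"])
```

Naming the dimension keeps the selection valid even if the dimension order of the array changes.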

We can also select a time range using label-based indexing with the .loc attribute:

period = da.loc[:,"2021-08-01":"2021-08-03",:,:]

period

<xarray.DataArray 'aod550' (forecast_period: 1, forecast_reference_time: 6,

latitude: 226, longitude: 900)> Size: 5MB

[1220400 values with dtype=float32]

Coordinates:

* forecast_period (forecast_period) timedelta64[ns] 8B 00:00:00

* forecast_reference_time (forecast_reference_time) datetime64[ns] 48B 202...

* latitude (latitude) float64 2kB 90.0 89.6 89.2 ... 0.4 0.0

* longitude (longitude) float64 7kB -180.0 -179.6 ... 179.6

valid_time (forecast_reference_time, forecast_period) datetime64[ns] 48B ...

Attributes: (12/33)

GRIB_paramId: 210207

GRIB_dataType: fc

GRIB_numberOfPoints: 203400

GRIB_typeOfLevel: surface

GRIB_stepUnits: 1

GRIB_stepType: instant

... ...

GRIB_units: ~

long_name: Total Aerosol Optical Depth at ...

units: ~

standard_name: unknown

GRIB_number: 0

GRIB_surface: 0.0

Geographic subset#

Geographical subsetting works in much the same way as temporal subsetting, with the difference that instead of one dimension we now have two (or even three if we include altitude).

Select nearest grid cell#

In some cases, we may want to find the geographic grid cell that is situated nearest to a particular location of interest, such as a city. In this case we can use .sel(), and make use of the method keyword argument, which enables nearest neighbor (inexact) lookups. In the example below, we look for the geographic grid cell nearest to Paris.
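Conceptually, a nearest-neighbour lookup picks the coordinate value with the smallest absolute distance to the requested one. A NumPy sketch of that idea, rebuilding the latitude axis from the values printed in the outputs above (90 down to 0 in 226 points, which is an assumption about the exact grid values):

```python
import numpy as np

# Latitude axis as printed above: 90.0, 89.6, 89.2, ..., 0.4, 0.0
latitudes = np.linspace(90.0, 0.0, 226)

target_lat = 48.9

# Index of the grid latitude closest to the target.
idx = int(np.abs(latitudes - target_lat).argmin())
nearest_lat = float(latitudes[idx])

print(idx, round(nearest_lat, 1))
```

The two candidate grid latitudes around 48.9 are 49.2 and 48.8, and 48.8 is the closer one. The tutorial's own lookup with .sel() follows.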

paris_lat = 48.9

paris_lon = 2.4

paris = da.sel(latitude=paris_lat, longitude=paris_lon, method='nearest')

paris

<xarray.DataArray 'aod550' (forecast_period: 1, forecast_reference_time: 16)> Size: 64B

[16 values with dtype=float32]

Coordinates:

* forecast_period (forecast_period) timedelta64[ns] 8B 00:00:00

* forecast_reference_time (forecast_reference_time) datetime64[ns] 128B 20...

latitude float64 8B 48.8

longitude float64 8B 2.4

valid_time (forecast_reference_time, forecast_period) datetime64[ns] 128B ...

Attributes: (12/33)

GRIB_paramId: 210207

GRIB_dataType: fc

GRIB_numberOfPoints: 203400

GRIB_typeOfLevel: surface

GRIB_stepUnits: 1

GRIB_stepType: instant

... ...

GRIB_units: ~

long_name: Total Aerosol Optical Depth at ...

units: ~

standard_name: unknown

GRIB_number: 0

GRIB_surface: 0.0

Regional subset#

Often we may wish to select a regional subset. Note that you can specify a region of interest in the ADS prior to downloading data. This is more efficient as it reduces the data volume. However, there may be cases when you wish to select a regional subset after download. One way to do this is with the .where() function.

In the previous examples, we have used methods that return a subset of the original data. By default .where() maintains the original size of the data, with the elements that do not satisfy the condition masked (they become “not a number”, or nan). Use of the option drop=True clips coordinate elements that are fully masked.

The example below uses .where() to select a geographic subset from 30 to 60 degrees latitude. We could also specify longitudinal boundaries, by simply adding further conditions.

mid_lat = da.where((da.latitude > 30.) & (da.latitude < 60.), drop=True)
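As noted above, longitude bounds can be added as further conditions. A sketch on a small synthetic grid (random values; the box limits of -10 to 40 degrees longitude are arbitrary choices for illustration). It also shows `.sel()` with slices as an alternative. Because latitude is stored from north to south, the latitude slice runs from the larger to the smaller value.

```python
import numpy as np
import xarray as xr

lat = np.arange(90.0, -1.0, -10.0)     # 90, 80, ..., 0 (descending, like the CAMS grid)
lon = np.arange(-180.0, 180.0, 20.0)   # -180, -160, ..., 160
rng = np.random.default_rng(0)
demo = xr.DataArray(
    rng.random((lat.size, lon.size)),
    dims=("latitude", "longitude"),
    coords={"latitude": lat, "longitude": lon},
)

# Mask everything outside the box, then drop rows and columns that are fully masked.
box = demo.where(
    (demo.latitude > 30.0) & (demo.latitude < 60.0)
    & (demo.longitude > -10.0) & (demo.longitude < 40.0),
    drop=True,
)

# Slice-based alternative. Slice bounds are inclusive, whereas the
# comparisons above exclude the boundary values themselves.
box_sel = demo.sel(latitude=slice(50.0, 40.0), longitude=slice(0.0, 20.0))

print(box.latitude.values, box.longitude.values)
```

For a rectangular box both approaches return the same cells; `.where()` is the more general tool when the condition depends on the data values or on an irregular region.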

Aggregate data#

Another common task is to aggregate data. This may include reducing hourly data to daily means, minima, maxima, or other statistical properties. We may also wish to apply the aggregation over one or more dimensions, such as averaging over all latitudes and longitudes to obtain one global value.

Temporal aggregation#

To aggregate over one or more dimensions, we can apply one of a number of methods to the original dataset, such as .mean(), .min(), .max(), .median() and others (see https://docs.xarray.dev/en/stable/api.html#id6 for the full list).

The example below takes the mean of all time steps. The keep_attrs parameter is optional. If set to True, it will keep the original attributes of the Data Array (i.e. description of variable, units, etc.). If set to False, the attributes will be stripped.

time_mean = da.mean(dim="forecast_reference_time", keep_attrs=True)

time_mean

<xarray.DataArray 'aod550' (forecast_period: 1, latitude: 226, longitude: 900)> Size: 814kB

array([[[0.50736654, 0.50736654, 0.50736654, ..., 0.50736654,

0.50736654, 0.50736654],

[0.46713126, 0.46682942, 0.466529 , ..., 0.46804398,

0.46773863, 0.46743435],

[0.43601978, 0.43541682, 0.4348175 , ..., 0.43785065,

0.43723673, 0.43662643],

...,

[0.07072866, 0.06997345, 0.06995939, ..., 0.0722326 ,

0.07046068, 0.0704512 ],

[0.07082945, 0.06887189, 0.06728195, ..., 0.07400212,

0.07277834, 0.0719097 ],

[0.06977722, 0.06664259, 0.06410388, ..., 0.07571781,

0.07463253, 0.07250822]]], dtype=float32)

Coordinates:

* forecast_period (forecast_period) timedelta64[ns] 8B 00:00:00

* latitude (latitude) float64 2kB 90.0 89.6 89.2 88.8 ... 0.8 0.4 0.0

* longitude (longitude) float64 7kB -180.0 -179.6 ... 179.2 179.6

Attributes: (12/33)

GRIB_paramId: 210207

GRIB_dataType: fc

GRIB_numberOfPoints: 203400

GRIB_typeOfLevel: surface

GRIB_stepUnits: 1

GRIB_stepType: instant

... ...

GRIB_units: ~

long_name: Total Aerosol Optical Depth at ...

units: ~

standard_name: unknown

GRIB_number: 0

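GRIB_surface: 0.0

Instead of reducing an entire dimension to one value, we may wish to reduce the frequency within a dimension. For example, we can reduce sub-daily data (here, two time steps per day) to daily maximum values. One way to do this is to use groupby() combined with the .max() aggregate function. Note that the .day accessor returns the day of the month, so this grouping is unambiguous only because all our data fall within a single month: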

daily_max = da.groupby('forecast_reference_time.day').max(keep_attrs=True)

daily_max

<xarray.DataArray 'aod550' (forecast_period: 1, day: 8, latitude: 226,

longitude: 900)> Size: 7MB

array([[[[0.26269364, 0.26269364, 0.26269364, ..., 0.26269364,

0.26269364, 0.26269364],

[0.22594188, 0.22530101, 0.22465824, ..., 0.22786163,

0.22722267, 0.22658275],

[0.24543594, 0.2441523 , 0.2428677 , ..., 0.24927448,

0.24799655, 0.24671672],

...,

[0.0983517 , 0.09476589, 0.08715175, ..., 0.10342334,

0.10067485, 0.09867404],

[0.12430927, 0.11521217, 0.10060379, ..., 0.11608146,

0.11144374, 0.11907455],

[0.11796734, 0.11791679, 0.10847542, ..., 0.11883758,

0.11546157, 0.11625931]],

[[2.568343 , 2.568343 , 2.568343 , ..., 2.568343 ,

2.568343 , 2.568343 ],

[2.6602957 , 2.659777 , 2.6592658 , ..., 2.6618922 ,

2.6613533 , 2.6608207 ],

[2.514293 , 2.5132563 , 2.512235 , ..., 2.5174892 ,

2.5164092 , 2.515344 ],

...

[0.09576356, 0.09529388, 0.09411991, ..., 0.08724582,

0.09197176, 0.09367597],

[0.0994662 , 0.09842908, 0.09573305, ..., 0.08806264,

0.09281051, 0.09561813],

[0.09634721, 0.09436309, 0.09271324, ..., 0.08776033,

0.09257209, 0.09482419]],

[[0.09904701, 0.09904701, 0.09904701, ..., 0.09904701,

0.09904701, 0.09904701],

[0.1035803 , 0.10347682, 0.10337335, ..., 0.10388643,

0.10378486, 0.10368282],

[0.11281282, 0.11260682, 0.11240035, ..., 0.11342746,

0.11322337, 0.11301833],

...,

[0.0636552 , 0.07602721, 0.08213645, ..., 0.0609377 ,

0.05778098, 0.05757457],

[0.05529577, 0.06107312, 0.06196576, ..., 0.06288081,

0.05802709, 0.05498534],

[0.0544017 , 0.05245811, 0.04827911, ..., 0.0706194 ,

0.06566173, 0.05943328]]]], dtype=float32)

Coordinates:

* forecast_period (forecast_period) timedelta64[ns] 8B 00:00:00

* latitude (latitude) float64 2kB 90.0 89.6 89.2 88.8 ... 0.8 0.4 0.0

* longitude (longitude) float64 7kB -180.0 -179.6 ... 179.2 179.6

* day (day) int64 64B 1 2 3 4 5 6 7 8

Attributes: (12/33)

GRIB_paramId: 210207

GRIB_dataType: fc

GRIB_numberOfPoints: 203400

GRIB_typeOfLevel: surface

GRIB_stepUnits: 1

GRIB_stepType: instant

... ...

GRIB_units: ~

long_name: Total Aerosol Optical Depth at ...

units: ~

standard_name: unknown

GRIB_number: 0

GRIB_surface: 0.0

Spatial aggregation#

We can apply the same principles to spatial aggregation. An important consideration when aggregating over latitude is the variation in area that the gridded data represents. To account for this, we would need to calculate the area of each grid cell. A simpler solution, however, is to use the cosine of the latitude as a proxy, since on a regular latitude-longitude grid the cell area is approximately proportional to it.

The example below demonstrates how to calculate a spatial average of total AOD, applied to the temporal mean we previously calculated, to obtain a single mean value of total AOD averaged in space and time.

We first calculate the cosine of the latitudes, having converted these from degrees to radians. We then apply these to the Data Array as weights.

weights = np.cos(np.deg2rad(time_mean.latitude))

weights.name = "weights"

time_mean_weighted = time_mean.weighted(weights)

Now we apply the aggregate function .mean() to obtain a weighted average.

Total_AOD = time_mean_weighted.mean(["longitude", "latitude"])

Total_AOD

<xarray.DataArray 'aod550' (forecast_period: 1)> Size: 8B

array([0.2940043])

Coordinates:

* forecast_period (forecast_period) timedelta64[ns] 8B 00:00:00
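To make the weighting explicit: a weighted mean is the sum of weight times value, divided by the sum of the weights. A NumPy sketch on a toy field (made-up values, not CAMS data) showing how cosine-latitude weights shift the result relative to a plain mean:

```python
import numpy as np

lat = np.array([80.0, 40.0, 0.0])   # degrees north

# Toy field with shape (latitude, longitude): high values near the pole.
field = np.array([[0.9, 0.9],
                  [0.3, 0.3],
                  [0.1, 0.1]])

weights = np.cos(np.deg2rad(lat))                        # one weight per latitude row
w2d = np.broadcast_to(weights[:, None], field.shape)     # repeat along longitude

plain_mean = field.mean()
weighted_mean = (field * w2d).sum() / w2d.sum()

# np.average implements the same formula.
assert np.isclose(weighted_mean, np.average(field, weights=w2d))

print(round(float(plain_mean), 4), round(float(weighted_mean), 4))
```

The weighted value is lower than the plain mean because the high values sit near the pole, where each grid cell covers less area.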

Export data#

This section includes a few examples of how to export data.

Export data as NetCDF#

The code below provides a simple example of how to export data to NetCDF.

paris.to_netcdf(f'{DATADIR}/2021-08_AOD_Paris.nc')

Export data as CSV#

You may wish to export this data into a format which enables processing with other tools. A commonly used file format is CSV, or “Comma Separated Values”, which can be used in software such as Microsoft Excel. This section explains how to export data from an xarray object into CSV. Xarray does not have a function to export directly into CSV, so instead we use the Pandas library. We will read the data into a Pandas Data Frame, then write to a CSV file using a dedicated Pandas function.

df = paris.to_dataframe()

df

| forecast_period | forecast_reference_time | latitude | longitude | valid_time | aod550 |
|---|---|---|---|---|---|
| 0 days | 2021-08-01 00:00:00 | 48.8 | 2.4 | 2021-08-01 00:00:00 | 0.171175 |
| | 2021-08-01 12:00:00 | 48.8 | 2.4 | 2021-08-01 12:00:00 | 0.264477 |
| | 2021-08-02 00:00:00 | 48.8 | 2.4 | 2021-08-02 00:00:00 | 0.174434 |
| | 2021-08-02 12:00:00 | 48.8 | 2.4 | 2021-08-02 12:00:00 | 0.311839 |
| | 2021-08-03 00:00:00 | 48.8 | 2.4 | 2021-08-03 00:00:00 | 0.370461 |
| | 2021-08-03 12:00:00 | 48.8 | 2.4 | 2021-08-03 12:00:00 | 0.511861 |
| | 2021-08-04 00:00:00 | 48.8 | 2.4 | 2021-08-04 00:00:00 | 0.215171 |
| | 2021-08-04 12:00:00 | 48.8 | 2.4 | 2021-08-04 12:00:00 | 0.155513 |
| | 2021-08-05 00:00:00 | 48.8 | 2.4 | 2021-08-05 00:00:00 | 0.185829 |
| | 2021-08-05 12:00:00 | 48.8 | 2.4 | 2021-08-05 12:00:00 | 0.261576 |
| | 2021-08-06 00:00:00 | 48.8 | 2.4 | 2021-08-06 00:00:00 | 0.210918 |
| | 2021-08-06 12:00:00 | 48.8 | 2.4 | 2021-08-06 12:00:00 | 0.404165 |
| | 2021-08-07 00:00:00 | 48.8 | 2.4 | 2021-08-07 00:00:00 | 0.201150 |
| | 2021-08-07 12:00:00 | 48.8 | 2.4 | 2021-08-07 12:00:00 | 0.322430 |
| | 2021-08-08 00:00:00 | 48.8 | 2.4 | 2021-08-08 00:00:00 | 0.142454 |
| | 2021-08-08 12:00:00 | 48.8 | 2.4 | 2021-08-08 12:00:00 | 0.298323 |

df.to_csv(f'{DATADIR}/2021-08_AOD_Paris.csv')
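When a CSV file is read back, the time column arrives as plain text unless pandas is told otherwise. A sketch on a three-row stand-in frame (the AOD values are the first three from the table above; the temporary file path and the name `demo_df` are for illustration only):

```python
import tempfile
from pathlib import Path

import pandas as pd

demo_df = pd.DataFrame(
    {"aod550": [0.171175, 0.264477, 0.174434]},
    index=pd.DatetimeIndex(
        ["2021-08-01 00:00", "2021-08-01 12:00", "2021-08-02 00:00"],
        name="forecast_reference_time",
    ),
)

with tempfile.TemporaryDirectory() as tmp:
    csv_path = Path(tmp) / "demo_AOD_Paris.csv"
    demo_df.to_csv(csv_path)

    # Restore the time index and parse it as datetimes on the way in.
    restored = pd.read_csv(
        csv_path, index_col="forecast_reference_time", parse_dates=True
    )

print(restored.index.dtype)
print(restored["aod550"].round(6).tolist())
```

Parsing the index as datetimes means time-based selection and resampling remain available after the round trip.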