Visualising the Fire Weather Index (FWI) for the European State of the Climate (ESOTC) assessment#

Copernicus Climate Change Service, Climate Data Store, (2019): Fire danger indices historical data from the Copernicus Emergency Management Service. Copernicus Climate Change Service (C3S) Climate Data Store (CDS). DOI: 10.24381/cds.0e89c522 (Accessed on DD-MMM-YYYY)

Wildfires develop where there is available combustible vegetation and an ignition source, such as from lightning strikes or from human activity. Once ignited, there are several elements that influence fire development. For example, the type and structure of vegetation and its moisture content affect the duration and intensity of a fire event and the topography and wind affect the direction and speed of spread.

To understand how the flammability of a particular area changes in response to weather conditions, and to assess the potential spread and intensity of a fire, fire danger indices are used, such as the Fire Weather Index (FWI), which express a measure of landscape flammability. Although they do not provide information on actual fires, as they do not consider the ignition, they have been shown to correlate with fire activity expressed in terms of burnt area. Indices such as FWI are calculated from daily temperature, relative humidity, wind speed and precipitation, and take into account the moisture content of potential fuels. Their values also reflect the weather conditions over the preceding days to months.

This notebook demonstrates how to:

Access, download and open the CEMS FWI data from the CDS

Extract the summer months

Plot maps of the FWI, both globally and for selected sub-regions

| Run the tutorial via free cloud platforms: |

|

|

|

|---|

Importing all the necessary python libraries#

Before we begin, we must prepare our enviroment. This includes installing the Application Programming Interface (API) of the CDS, and importing the various python libraries we will need.

The following code cells install and import the cdsapi and several other packages used in this notebook.

# If necessary install the required packages.

!pip -q install xarray

!pip -q install 'cdsapi>=0.7.0'

!pip -q install matplotlib cartopy

import os

# CDS API

import cdsapi

# working with multidimensional arrays

import xarray as xr

import numpy as np

# libraries for plotting and visualising the data

import cartopy as cart

import matplotlib.pyplot as plt

import cartopy.crs as ccrs

import matplotlib.colors as colors

import datetime as dt

1. Search and download the data#

Enter your CDS API key#

We will request data from the Climate Data Store (CDS) programmatically with the help of the CDS API.

If you have not set up your CDS API credentials with a ~/.cdsapirc file, it is possible to provide the credentials when initialising the cdsapi.Client. To do this we must define the two variables needed to establish a connection: URL and KEY . The URL for the cds api is common and you do not need to modify that field. The KEY is string of characters made up of your your personal User ID and CDS API key. To obtain these, first register or login to the CDS (https://cds.climate.copernicus.eu), then visit https://cds.climate.copernicus.eu/how-to-api and copy the string of characters listed after “key:”. Replace the ######### below with this string.

NOTE: If you have set up your cdsapi key using a ~/.cdsapirc you do not need to add your key below.

cdsapi_kwargs = ''

if 1==2:

if os.path.isfile("~/.cdsapirc"):

cdsapi_kwargs = ''

else:

URL = 'https://ewds.climate.copernicus.eu/api'

KEY = '###################################'

cdsapi_kwargs = {

'url': URL,

'key': KEY,

}

Here we specify a data directory in which we will download our data and all output files that we will generate:

# Only need to be run once

DATADIR = './data_dir'

os.makedirs(DATADIR, exist_ok=True)

Find the fire danger indices historical data#

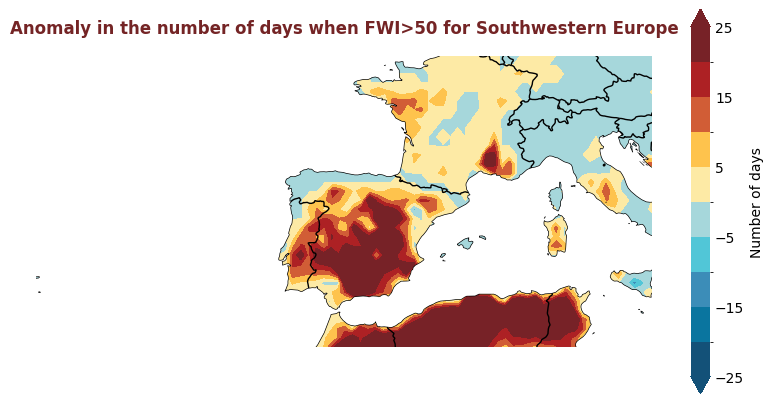

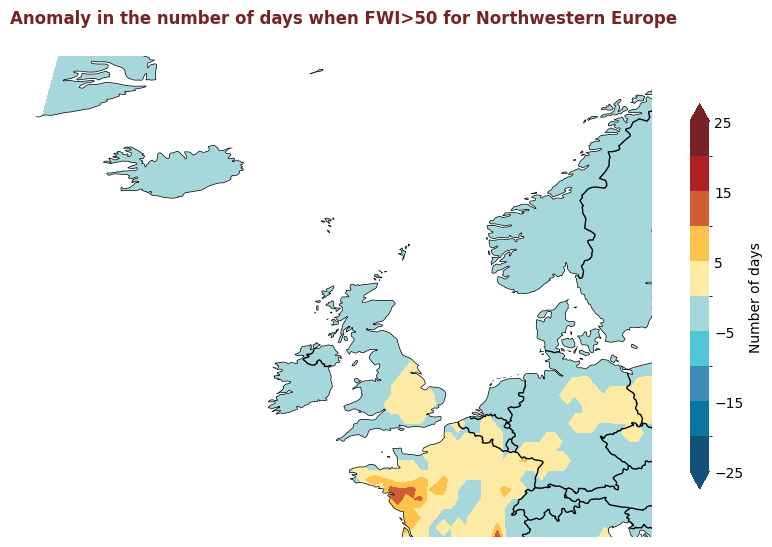

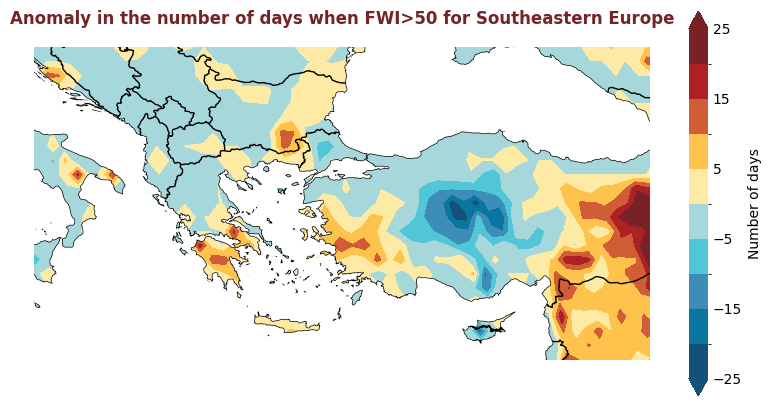

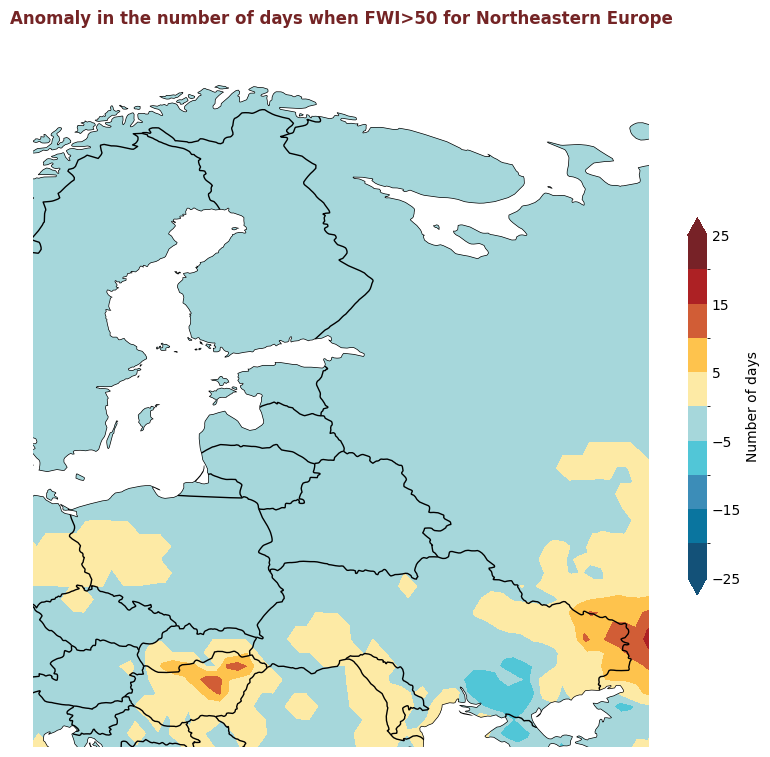

In this notebook we calculate and plot the diffrence between number of days when FWI>50 in 2022 and mean number between 1991-2020. The data can be found in Fire danger indices historical data from the Copernicus Emergency Management Service

For this example, we are using the following selection on the download page:

Product Type : ‘Reanalysis’,

Variable : ‘Fire Weather Index’,

System Version : ‘4.1’,

Dataset Type : ‘Consolidated Dataset’,

Year : ‘2015-2022’,

Day : ‘1-31’,

Grid : ‘0.5/0.5’,

Format : ‘netcdf’,

After filling out the download form, select “Show API request”. This will reveal a block of code, which you can simply copy and paste into a cell of your Jupyter Notebook. One important thing before being able to download the request, is to accept the Terms of use in the cell just before the API request. Having double checked your request, and accepted the terms of use, your request should be similar to that shown in the cell below. Please note we have added some additional lines which ensure that we do not repeat the download if we have already downloaded the file and include the option to provide the cdsapi credentials when we start the Client.

# if you're not changing the request, only need to be run once

# Choose the name and location of the donwload file

download_file = f"{DATADIR}/download_*.nc"

c = cdsapi.Client()

# The CDS request based on your selections for this example:

for year in range(1991, 2023) :

if not os.path.isfile(f'{DATADIR}/download_{year}.nc'):

c.retrieve(

'cems-fire-historical-v1',

{

'product_type': 'reanalysis',

'variable': 'fire_weather_index',

'system_version': '4_1',

'dataset_type': 'consolidated_dataset',

'year': year,

'month': [

'01', '02', '03',

'04', '05', '06',

'07', '08', '09',

'10', '11', '12',

],

'day': [

'01', '02', '03',

'04', '05', '06',

'07', '08', '09',

'10', '11', '12',

'13', '14', '15',

'16', '17', '18',

'19', '20', '21',

'22', '23', '24',

'25', '26', '27',

'28', '29', '30',

'31',

],

'grid': '0.5/0.5',

'data_format':'netcdf_legacy'

},

f'{DATADIR}/download_{year}.nc')

2024-10-14 17:17:52,815 WARNING [2024-05-15T00:00:00] **BETA version** of the new [CEMS](https://emergency.copernicus.eu/) [Early Warning Data Store (EWDS)](https://ewds.climate.copernicus.eu/). Your [feedback](https://jira.ecmwf.int/plugins/servlet/desk/portal/1/create/202) is very useful for us. **Please notice** that access to the system might suffer some disruptions due to evolving updates.

2: Calculate the ESoTC FWI metric#

Load and inspect the global data with xarray#

To ensure that we do not run out of memory, we open the data using xarray’s in-built dask functionality via the keyword chunks. In the example below xarray will only load 365 time steps into memory at any one time.

At this point we also set the longitude dimension to the range -180 to +180, the source data is provided from 0 to 360.

# For a global grid:

ds = xr.open_mfdataset(

download_file, chunks={'valid_time': 365}

)

# make sure the fields has the right longitude record from -180 to 180

ds.coords['longitude'] = (ds.coords['longitude'] + 180) % 360 - 180

ds = ds.sortby(ds.longitude)

# We only have one variable (FWI), so we convert to a dataarray for easier computation:

da = ds.fwinx

./data_dir/download_*.nc

/opt/homebrew/anaconda3/envs/JUPYTER-BUILD/lib/python3.12/site-packages/xarray/core/dataset.py:282: UserWarning: The specified chunks separate the stored chunks along dimension "valid_time" starting at index 365. This could degrade performance. Instead, consider rechunking after loading.

warnings.warn(

/opt/homebrew/anaconda3/envs/JUPYTER-BUILD/lib/python3.12/site-packages/xarray/core/dataset.py:282: UserWarning: The specified chunks separate the stored chunks along dimension "valid_time" starting at index 365. This could degrade performance. Instead, consider rechunking after loading.

warnings.warn(

/opt/homebrew/anaconda3/envs/JUPYTER-BUILD/lib/python3.12/site-packages/xarray/core/dataset.py:282: UserWarning: The specified chunks separate the stored chunks along dimension "valid_time" starting at index 365. This could degrade performance. Instead, consider rechunking after loading.

warnings.warn(

/opt/homebrew/anaconda3/envs/JUPYTER-BUILD/lib/python3.12/site-packages/xarray/core/dataset.py:282: UserWarning: The specified chunks separate the stored chunks along dimension "valid_time" starting at index 365. This could degrade performance. Instead, consider rechunking after loading.

warnings.warn(

/opt/homebrew/anaconda3/envs/JUPYTER-BUILD/lib/python3.12/site-packages/xarray/core/dataset.py:282: UserWarning: The specified chunks separate the stored chunks along dimension "valid_time" starting at index 365. This could degrade performance. Instead, consider rechunking after loading.

warnings.warn(

/opt/homebrew/anaconda3/envs/JUPYTER-BUILD/lib/python3.12/site-packages/xarray/core/dataset.py:282: UserWarning: The specified chunks separate the stored chunks along dimension "valid_time" starting at index 365. This could degrade performance. Instead, consider rechunking after loading.

warnings.warn(

/opt/homebrew/anaconda3/envs/JUPYTER-BUILD/lib/python3.12/site-packages/xarray/core/dataset.py:282: UserWarning: The specified chunks separate the stored chunks along dimension "valid_time" starting at index 365. This could degrade performance. Instead, consider rechunking after loading.

warnings.warn(

/opt/homebrew/anaconda3/envs/JUPYTER-BUILD/lib/python3.12/site-packages/xarray/core/dataset.py:282: UserWarning: The specified chunks separate the stored chunks along dimension "valid_time" starting at index 365. This could degrade performance. Instead, consider rechunking after loading.

warnings.warn(

Calculate the anomaly in the number of days with Fire Weather Index > 50#

The first step is to extract the period we want to analyse (1st May to 30th September) and count the number of days per year where FWI is greater than our threshold, 50.

# Extract the summer months from the data:

da_summer = da.sel(valid_time=ds.valid_time.dt.month.isin([5,6,7,8,9]))

# Count the number of days where the FWI is greater than our threshold, i.e. 50:

fwi_threshold = 50

fwi_over_50 = da_summer.where(da_summer>fwi_threshold)

nday_fwi_over_50 = fwi_over_50.groupby('valid_time.year').count(dim = 'valid_time')

nday_fwi_over_50

<xarray.DataArray 'fwinx' (year: 32, latitude: 361, longitude: 720)> Size: 67MB

dask.array<stack, shape=(32, 361, 720), dtype=int64, chunksize=(1, 23, 45), chunktype=numpy.ndarray>

Coordinates:

surface float64 8B 0.0

* latitude (latitude) float64 3kB 90.0 89.5 89.0 88.5 ... -89.0 -89.5 -90.0

* longitude (longitude) float64 6kB -180.0 -179.5 -179.0 ... 179.0 179.5

* year (year) int64 256B 1991 1992 1993 1994 ... 2019 2020 2021 2022

Attributes: (12/30)

GRIB_paramId: 260540

GRIB_dataType: fc

GRIB_numberOfPoints: 259920

GRIB_typeOfLevel: surface

GRIB_stepUnits: 1

GRIB_stepType: instant

... ...

GRIB_name: Forest fire weather index (as d...

GRIB_shortName: fwinx

GRIB_units: Numeric

long_name: Forest fire weather index (as d...

units: Numeric

standard_name: unknownThen we calculate a climatology for the period 1991 to 2020 and use that to calculate the anomaly for 2020

# Select year 1991 to 2020 and calculate the mean for each gridcell

nday_fwi_over_50_clim = nday_fwi_over_50.sel(year=slice(1991,2020)).mean(dim='year')

# Subtract the mean from the data for 2022

nday_fwi_over_50_anomaly_2022 = nday_fwi_over_50.sel(year=2022) - nday_fwi_over_50_clim

nday_fwi_over_50_anomaly_2022

<xarray.DataArray 'fwinx' (latitude: 361, longitude: 720)> Size: 2MB

dask.array<sub, shape=(361, 720), dtype=float64, chunksize=(23, 45), chunktype=numpy.ndarray>

Coordinates:

surface float64 8B 0.0

* latitude (latitude) float64 3kB 90.0 89.5 89.0 88.5 ... -89.0 -89.5 -90.0

* longitude (longitude) float64 6kB -180.0 -179.5 -179.0 ... 179.0 179.5

year int64 8B 20223. Plot the data as maps#

First we define a plotting function that we will use throughout this section

# Colour scale options for the normal and masked version of the plotting method

# They are identical except for the grey region between -1 and +1 in the masked version

COLOURS = {

"normal": ['#135178', '#0a759f', '#3c8db8', '#51c6d7', '#a6d7db', '#fdeaa5', '#fec34d', '#d15d36', '#ad2124', '#772227'],

"masked": ['#135178', '#0a759f', '#3c8db8', '#51c6d7', '#a6d7db','grey', '#fdeaa5', '#fec34d', '#d15d36', '#ad2124', '#772227'],

}

BOUNDS = {

"normal": [-25, -20, -15, -10, -5, 0, 5, 10, 15, 20, 25],

"masked": [-25, -20, -15, -10, -5, -1, 1, 5, 10, 15, 20, 25],

}

CBAR_TICKS = {

"normal": [-25, -15, -5, 5, 15, 25],

"masked": [-25, -15, -5, -1, 1, 5, 15, 25],

}

def produce_map_plot(

da, # Data array to plot

title = "Anomaly in the number of days when FWI>50",

cbar_title = "Number of days",

borders=True, projection = ccrs.Robinson(),

extent = None, figsize=(10,10),

style = "normal",

):

# Create a plotting axis

plt.figure(figsize=figsize, dpi=100)

ax = plt.axes(projection=projection)

# Pick the style options

colours = COLOURS[style]

bounds = BOUNDS[style]

cbar_ticks = CBAR_TICKS[style]

under_colour = colours[0]

over_colour = colours[-1]

# Create the custom colormap from the selected options

my_cmap = (colors.ListedColormap(colours))

my_cmap.set_over(over_colour)

my_cmap.set_under(under_colour)

# Specify the number of discrete levels to use in the colormap

norm = colors.BoundaryNorm(bounds, my_cmap.N)

# Plot data with contours and shading

img = da.plot.contourf(

ax=ax, transform=ccrs.PlateCarree(), cmap=my_cmap,

norm=norm, levels=len(bounds), extend='both',

add_colorbar=False, add_labels=False

)

# Add a colour bar

if cbar_ticks is None:

cbar_ticks = bounds[::2]

cbar = plt.colorbar(img, ax=ax, shrink=0.5, ticks=cbar_ticks)

cbar.set_label(cbar_title)

cbar.outline.set_visible(False)

cbar.ax.tick_params(size=0, labelsize=10)

# Add additional features to the plots

ax.coastlines() # Add coastlines

ax.set_frame_on(False) # remove frame

# Add borders if requested

if borders:

ax.add_feature(cart.feature.BORDERS, edgecolor='black', lw=1)

# Set map extent

if extent is not None:

ax.set_extent(extent, ccrs.PlateCarree())

# cover with white oceans:

ax.add_feature(cart.feature.OCEAN, color='white', lw=0, zorder=100)

# Add title

plt.title(title, fontsize=12, fontweight='bold', y=1.05, color='#742425')

plt.tight_layout()

# Option to save, but not used

#plt.savefig('./fwi_anom_europe_v_nowhite.tiff', format='tiff',facecolor='white')

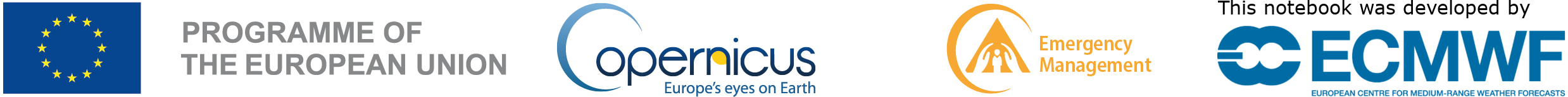

We then use this function to produce a global plot of the anomaly for 2022. Note that we mask areas where the anomaly is in between -1 and 1 to highlight the regions of interest.

produce_map_plot(nday_fwi_over_50_anomaly_2022, borders=False, style="masked")

The figure above displays the 2020 anomaly, with respect to a 2015-2019 reference period, for the number of days where the FWI is considered high, i.e. >50. Red indicates where there were more days with high FWI in 2020 than the refence period, and blue indicates that there were fewer days with high FWI.

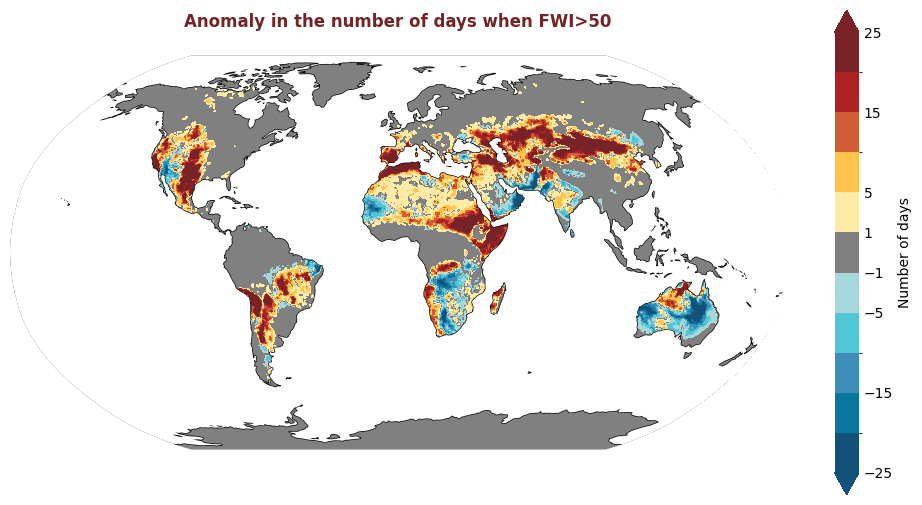

Produce maps for each of the ESOTC Regions#

ESOTC Regions

Europe: 25°W–40°E, 34°–72°N

Southwestern Europe: 25°W–15°E, 34°–45°N

Northwestern Europe: 25°W–15°E, 45°–72°N

Southeastern Europe: 15°–40°E, 34°–45°N

Northeastern Europe: 15°–40°E, 45°–72°N

Central Europe: 2°–24°E, 45°–55°N

esotc_regions = {

'Europe':{'extent': [-25,40,34,72], 'limits':[0,20]},

'Southwestern Europe':{'extent': [-25,15,34,50], 'limits':[0,45]},

'Northwestern Europe':{'extent': [-25,15,45,72], 'limits':[0,20]},

'Southeastern Europe':{'extent': [15,40,34,45], 'limits':[0,45]},

'Northeastern Europe':{'extent': [15,40,45,72], 'limits':[0,20]},

}

# Take a subset of data to improve plotting speed

europe_data = nday_fwi_over_50_anomaly_2022.sel(

latitude=slice(80, 25), longitude=slice(-30,80)

)

for region, config in esotc_regions.items():

extent = config.get('extent')

# figsize = config.get('figsize', (8,8))

produce_map_plot(

europe_data,

title = "Anomaly in the number of days when FWI>50 for " + region,

extent = extent, figsize=(8,8)

)

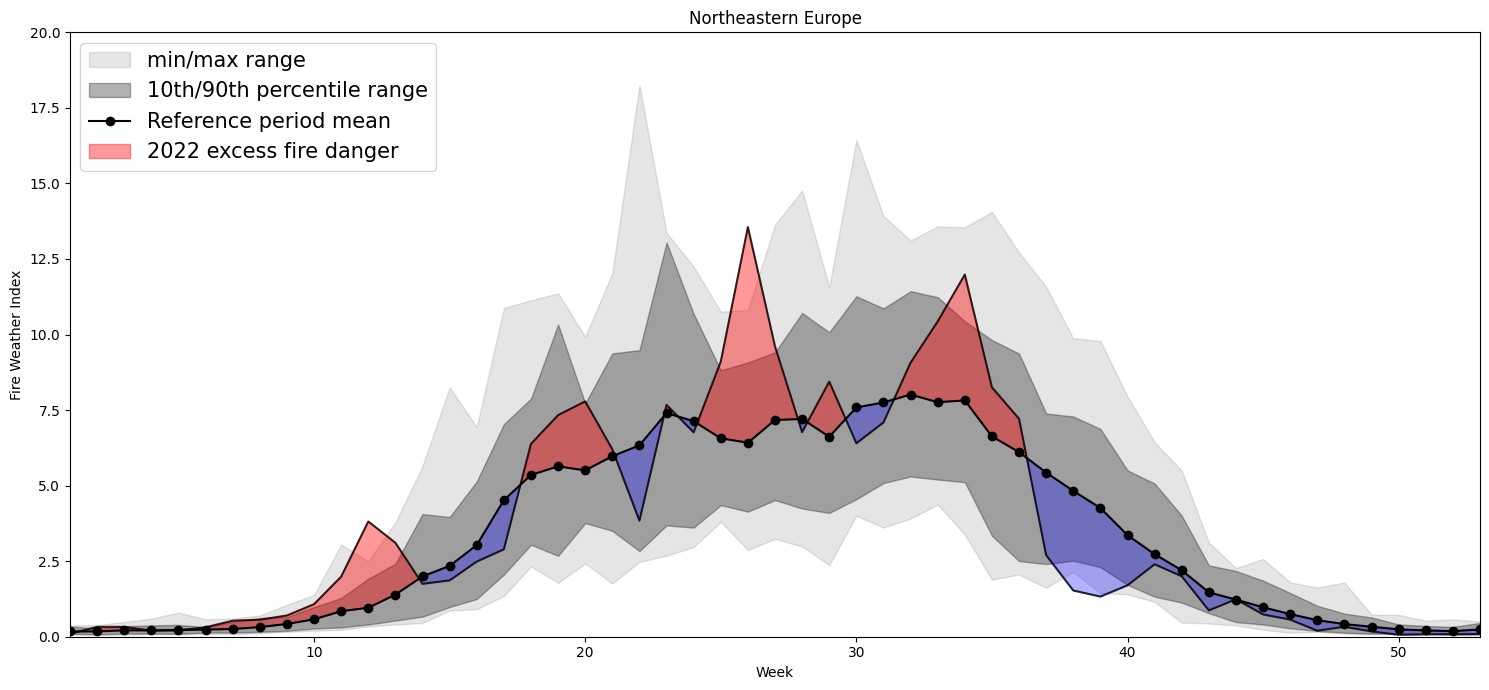

Plotting Timeseries of Weekly#

2022 vs 1991-2020 as Climate#

for region, config in esotc_regions.items():

extent = config.get('extent')

limits = config.get('limits')

print(region)

lon1=extent[0]

lon2=extent[1]

lat2=extent[2]

lat1=extent[3]

cymin=limits[0]

cymax=limits[1]

small = ds.sel(latitude=slice(lat1,lat2), longitude=slice(lon1,lon2))

# Clima

clim= small.sel(valid_time=slice('1991-01-01','2020-12-31')).mean(dim=['longitude','latitude'])

weekly_clim= clim.resample(valid_time='W').mean(dim='valid_time')

weekly_clim=weekly_clim.chunk({'valid_time':-1})

w_clim_mean=weekly_clim.groupby(weekly_clim['valid_time'].dt.isocalendar().week).mean()#.load()

w_clim_max=weekly_clim.groupby(weekly_clim['valid_time'].dt.isocalendar().week).max()#.load()

w_clim_min=weekly_clim.groupby(weekly_clim['valid_time'].dt.isocalendar().week).min()#.load()

w_clim_q10=weekly_clim.groupby(weekly_clim['valid_time'].dt.isocalendar().week).quantile(0.1)#.load()

w_clim_q90=weekly_clim.groupby(weekly_clim['valid_time'].dt.isocalendar().week).quantile(0.9)#.load()

# this year

ty= small.sel(valid_time=slice('2022-01-01','2022-12-31')).mean(dim=['longitude','latitude'])

weekly_ty= ty.resample(valid_time='W').mean(dim='valid_time')

weekly_ty=weekly_ty.chunk({'valid_time':-1})

w_ty_mean=weekly_ty.groupby(weekly_ty['valid_time'].dt.isocalendar().week).mean()#.load()

# variables for plotting

x=w_clim_max['week'].values

xdate = "2022-W26"

r = dt.datetime.strptime(xdate + '-1', "%Y-W%W-%w")

ymean=w_clim_mean.fwinx.values

ymin=w_clim_min.fwinx.values

ymax=w_clim_max.fwinx.values

y10=w_clim_q10.fwinx.values

y90=w_clim_q90.fwinx.values

tymean=w_ty_mean.fwinx.values

# this only needed if odd number of weeks

tymean=np.append(tymean,tymean[51])

fig, ax = plt.subplots(1, figsize=(15, 7),dpi=100)

ax.fill_between(x, ymax, ymin,

color="k",

alpha=0.1,

label='min/max range')

ax.fill_between(x, y10, y90,

color="k",

alpha=0.3,

label='10th/90th percentile range')

ax.plot(x, ymean, 'o-',

color="k",

label="Reference period mean")

ax.fill_between(x, ymean,tymean,

color="r",

alpha=0.4,

where= tymean>= ymean,

interpolate=True,

label="2022 excess fire danger")

ax.fill_between(x, ymean,tymean,

color="b",

alpha=0.3,

where= tymean <= ymean,

interpolate=True)

ax.plot(x, tymean, '-', color='black',alpha=0.8)

ax.legend(loc='upper left',fontsize=15)

plt.ylim(ymax=cymax,ymin=cymin) # this line

plt.xlim(xmax=53,xmin=1)

plt.title(region)

ax.set_xlabel('Week')

ax.set_ylabel('Fire Weather Index')

plt.tight_layout()

# plt.savefig(f'{region}_weeklyTS.tiff', format='tiff',facecolor='white')

Europe

Southwestern Europe

Northwestern Europe

Southeastern Europe

Northeastern Europe