5.1.5. Uncertainty in projected changes of energy consumption in Europe

Production date: 23-05-2024

Produced by: CMCC foundation - Euro-Mediterranean Center on Climate Change. Albert Martinez Boti.

🌍 Use case: Assessing possible impacts of climate change on energy demand in Europe

❓ Quality assessment question

What are the projected future changes and associated uncertainties of Energy Degree Days in Europe?

Climate change affects a wide range of sectors, including agriculture [1], forest ecosystems [2], and energy consumption [3]. Under projected future global warming over Europe [4][5], the current increase in energy demand is expected to persist until the end of this century and beyond [6]. Identifying which climate-change-related impacts are likely to increase, by how much, and with which regional patterns is important for any effective strategy for managing future climate risks.

This notebook uses data from a subset of CMIP6 Global Climate Models (GCMs) and explores the uncertainty in future projections of energy-consumption-related indices by considering the ensemble inter-model spread of projected changes. Two energy-consumption-related indices are calculated from daily mean temperatures using the icclim Python package: Cooling Degree Days (CDDs) and Heating Degree Days (HDDs). Degree days measure how much warmer or colder it is compared to standard temperatures (usually 15.5°C for heating and 22°C for cooling). Higher degree day numbers indicate more extreme temperatures, which typically lead to increased energy use for heating or cooling buildings. In the presented code, CDD calculations use summer aggregation (CDD22), while HDD calculations focus on winter (HDD15.5), and results are presented as daily averages rather than cumulative values.

Within this notebook, these calculations are performed over the future period from 2016 to 2099, following the Shared Socioeconomic Pathway SSP5-8.5. The results presented here pertain to a specific subset of the CMIP6 ensemble and may not be generalisable to the entire dataset. A separate assessment examines the representation of climatology and trends of these indices for the same models during the historical period (1971-2000), while another assessment looks at the projected climate signal of these indices for the same models at a 2°C Global Warming Level.

📢 Quality assessment statement

These are the key outcomes of this assessment

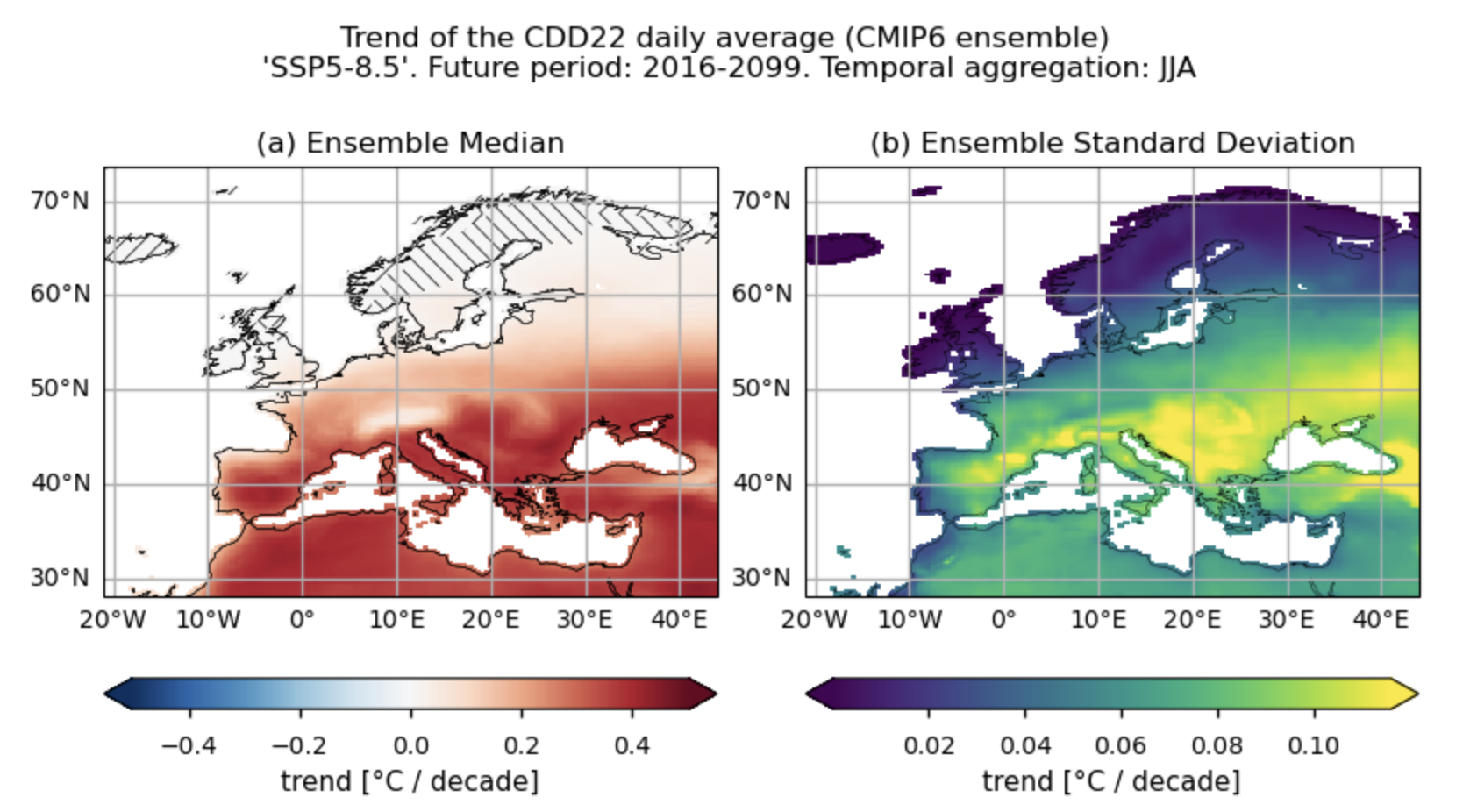

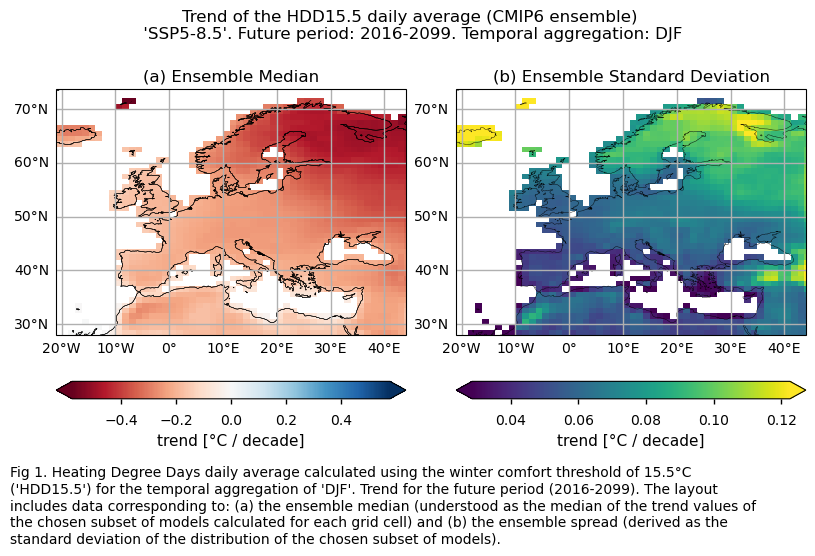

The subset of considered CMIP6 models agree on a general decrease in future trends (2016-2099) for HDD15.5 across Europe during DJF and an increase in CDD22 during JJA. However, regional variations exist.

The northern and eastern parts of Europe are projected to experience the largest decrease in HDD15.5, accompanied by higher inter-model variability. Some areas show no trend for CDD22 because the threshold temperature is not reached. The Mediterranean Basin will see the greatest increase in CDD22, with higher inter-model variability.

The findings of this notebook could support decisions sensitive to future energy demand. Despite regional variations and some inter-model spread (calculated to account for projected uncertainty), the subset of 16 models from CMIP6 agree on a significant decrease in the energy required for heating spaces during winter. This decrease is particularly notable in regions with high HDD (northern and eastern regions that experience substantial heating energy consumption in winter). Conversely, more energy will be needed in the future to cool buildings during summer, especially in the Mediterranean Basin.

Fig. 5.1.5.1 Cooling Degree Days daily average calculated using the summer comfort threshold of 22°C (‘CDD22’) for the temporal aggregation of ‘JJA’. Trend for the future period (2016-2099). The layout includes data corresponding to: (a) the ensemble median (understood as the median of the trend values of the chosen subset of models calculated for each grid cell) and (b) the ensemble spread (derived as the standard deviation of the distribution of the chosen subset of models).

📋 Methodology

The reference methodology used here for the indices calculation is similar to the one followed by Scoccimarro et al. (2023) [7]. However, the thermal comfort thresholds used in this notebook are slightly different. A winter comfort temperature of 15.5°C and a summer comfort temperature of 22.0°C are used here (as in the CDS application). In the presented code, the CDD calculations are based on the JJA aggregation, with a comfort temperature of 22°C (CDD22), while HDD calculations focus on winter (DJF) with a comfort temperature of 15.5°C (HDD15.5).

More specifically, to calculate CDD22, the sum of the differences between the daily mean temperature and the thermal comfort temperature of 22°C is computed. A day contributes only when its mean temperature is above the thermal comfort level; otherwise, the CDD22 for that day is set to 0. For example, a day with a mean temperature of 28°C contributes 6°C, and two consecutive hot days like this total 12°C over the two-day period. Similarly, to calculate HDD15.5, the sum of the differences between the thermal comfort temperature of 15.5°C and the daily mean temperature is determined. A day contributes only when its mean temperature is below the thermal comfort level; otherwise, the HDD15.5 for that day is set to 0. Finally, to obtain more intuitive values, the sum is divided by the number of days in the season to produce daily average values.

This approach differs from the CDS application, where both the sum over a period and the daily average values can be displayed. In Spinoni et al. (2018) [6], as well as in the CDS application, more advanced methods for calculating CDD and HDD consider maximum, minimum, and mean temperatures. However, to avoid overloading the notebook and to keep it simple while ensuring compatibility with the icclim Python package, we opted to use a single variable (2m mean temperature).
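The degree-day definition above can be illustrated with a minimal, pure-Python sketch. It is not the icclim implementation used in the notebook; the function name `degree_days_daily_average` and the sample temperatures are hypothetical and chosen only to reproduce the 28°C example from the text.

```python
def degree_days_daily_average(daily_mean_temps, threshold, kind):
    """Return the daily-average degree days (in °C) for one season.

    kind="cooling": each day contributes max(T - threshold, 0).
    kind="heating": each day contributes max(threshold - T, 0).
    """
    if kind == "cooling":
        contributions = [max(t - threshold, 0.0) for t in daily_mean_temps]
    elif kind == "heating":
        contributions = [max(threshold - t, 0.0) for t in daily_mean_temps]
    else:
        raise ValueError("kind must be 'cooling' or 'heating'")
    # Divide the seasonal sum by the number of days to get a daily average.
    return sum(contributions) / len(contributions)


# Hypothetical four-day samples (°C), for illustration only.
summer_days = [28.0, 28.0, 20.0, 22.0]
winter_days = [10.5, 15.5, 18.0, 5.5]

# Two days at 28°C contribute 6°C each, i.e. 12°C in total.
cdd22_sum = sum(max(t - 22.0, 0.0) for t in summer_days)
cdd22 = degree_days_daily_average(summer_days, 22.0, "cooling")
hdd15_5 = degree_days_daily_average(winter_days, 15.5, "heating")

print(cdd22_sum, cdd22, hdd15_5)
```

In this sample, days at or below 22°C add nothing to CDD22, and days at or above 15.5°C add nothing to HDD15.5.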

This notebook offers an assessment of the projected changes and their associated uncertainties using a subset of 16 models from CMIP6. The uncertainty is examined by analysing the ensemble inter-model spread of projected changes for the energy-consumption-related indices ‘HDD15.5’ and ‘CDD22’, calculated for the future period spanning from 2016 to 2099. In particular, spatial patterns of climate projected trends are examined and displayed for each model individually and for the ensemble median (calculated for each grid cell), alongside the ensemble inter-model spread to account for projected uncertainty. Additionally, spatially-averaged trend values are analysed and presented using box plots to provide an overview of trend behavior across the distribution of the chosen subset of models when averaged across Europe.

The analysis and results are organised as follows:

1. Parameters, requests and functions definition

2. Downloading and processing

3. Plot and describe results

📈 Analysis and results

1. Parameters, requests and functions definition

1.1. Import packages

1.2. Define Parameters

In the “Define Parameters” section, various customisable options for the notebook are specified. Most of the parameters are the same as those used in another assessment (“CMIP6 Climate Projections: evaluating biases in energy-consumption-related indices in Europe”). They are:

The initial and ending years of the future projections period can be specified by changing the parameters year_start and year_stop (2016-2099 is chosen).

index_timeseries is a dictionary that sets the temporal aggregation for every index considered within this notebook (‘HDD15.5’ and ‘CDD22’). In the presented code, the CDD calculations are always based on the JJA aggregation, with a comfort temperature of 22°C (CDD22), while HDD calculations focus on winter (DJF) with a comfort temperature of 15.5°C (HDD15.5).

collection_id sets the family of models. Only CMIP6 is implemented for this sub-notebook.

area allows specifying the geographical domain of interest.

The interpolation_method parameter allows selecting the interpolation method when regridding is performed over the indices.

The chunk selection allows the user to define whether the data are divided into chunks when downloaded to their local machine. Although it does not significantly affect the analysis, it is recommended to keep the default value for optimal performance.

1.3. Define models

The following climate analyses are performed considering a subset of GCMs from CMIP6. Models names are listed in the parameters below. Some variable-dependent parameters are also selected.

The selected CMIP6 models provide both the historical and SSP5-8.5 experiments, and they are the same as those used in other assessments (“CMIP6 Climate Projections: evaluating biases in energy-consumption-related indices in Europe”).

1.4. Define land-sea mask request

Within this sub-notebook, ERA5 will be used to download the land-sea mask when plotting. In this section, we set the required parameters for the cds-api data-request of ERA5 land-sea mask.

1.5. Define model requests

In this section we set the required parameters for the cds-api data-request.

When weights = True, spatial weighting is applied for calculations requiring spatial data aggregation. This is particularly relevant for CMIP6 GCMs with regular lon-lat grids that do not consider varying surface extensions at different latitudes.
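As a rough illustration of why spatial weighting matters on a regular lon-lat grid, the sketch below weights each grid cell by the cosine of its latitude, a common choice because cell area shrinks towards the poles. Whether the notebook's weights = True option uses exactly this scheme is an assumption; the function `weighted_spatial_mean` and the tiny grid are hypothetical.

```python
import math


def weighted_spatial_mean(field, latitudes, weights=True):
    """Average a 2D field given as a list of rows, one row per latitude.

    With weights=True each row is weighted by cos(latitude), which
    approximates the relative cell area on a regular lon-lat grid.
    """
    total = 0.0
    weight_sum = 0.0
    for row, lat in zip(field, latitudes):
        w = math.cos(math.radians(lat)) if weights else 1.0
        for value in row:
            total += w * value
            weight_sum += w
    return total / weight_sum


# Hypothetical 2x2 field: one row at the equator, one row at 60°N.
latitudes = [0.0, 60.0]
field = [[1.0, 1.0], [3.0, 3.0]]

unweighted = weighted_spatial_mean(field, latitudes, weights=False)
weighted = weighted_spatial_mean(field, latitudes, weights=True)
print(unweighted, weighted)
```

The weighted mean is pulled towards the equatorial row because cells at 60°N cover roughly half the area of cells at the equator.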

1.6. Functions to cache

In this section, functions that will be executed in the caching phase are defined. Caching is the process of storing copies of files in a temporary storage location, so that they can be accessed more quickly. This process also checks if the user has already downloaded a file, avoiding redundant downloads.
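The idea of caching can be sketched in a few lines. This is only an in-memory illustration of the principle and not how the c3s_eqc_automatic_quality_control package stores its results; `cached_call`, `fake_download` and the request dictionary are hypothetical names introduced for the example.

```python
import hashlib
import json

_cache = {}  # maps a hash of the request to the stored result


def cached_call(func, request):
    """Run func(request) once per distinct request and reuse the result."""
    key = hashlib.sha256(
        json.dumps(request, sort_keys=True).encode("utf-8")
    ).hexdigest()
    if key not in _cache:
        _cache[key] = func(request)
    return _cache[key]


calls = []  # records how many times the expensive step really runs


def fake_download(request):
    """Stand-in for an expensive download-and-transform step."""
    calls.append(request)
    return {"n_years": request["year_stop"] - request["year_start"] + 1}


request = {"model": "gfdl_esm4", "year_start": 2016, "year_stop": 2099}
first = cached_call(fake_download, request)
second = cached_call(fake_download, dict(request))  # same content, new object
print(first, second, len(calls))
```

The second call returns the stored result without executing the expensive step again, which is the behaviour described above for redundant downloads.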

Functions description:

The select_timeseries function subsets the dataset based on the chosen timeseries parameter.

The compute_indices function utilises the icclim package to calculate the energy-consumption-related indices.

The compute_trends function employs the Mann-Kendall test for trend calculation.

Finally, the compute_indices_and_trends function calculates the energy-consumption-related indices for the corresponding temporal aggregation using the compute_indices function, determines the indices mean for the future period (2016-2099), obtains the trends using the compute_trends function, and offers an option for regridding to model_regrid.

2. Downloading and processing

2.1. Download and transform the regridding model

In this section, the download.download_and_transform function from the ‘c3s_eqc_automatic_quality_control’ package is employed to download daily data from the selected CMIP6 regridding model, compute the energy-consumption-related indices for the selected temporal aggregation (“DJF” for HDD and “JJA” for CDD), calculate the mean and trend over the future projections period (2016-2099), and cache the result (to avoid redundant downloads and processing).

The regridding model is understood here as the model whose grid will be used to interpolate the others. This ensures all models share a common grid, facilitating the calculation of median values for each grid cell. The regridding model within this notebook is “gfdl_esm4”, but a different one can be selected by modifying the model_regrid parameter in section 1.3 (Define models). Note that the choice of target grid matters and depends on the specific application.

2.2. Download and transform models

In this section, the download.download_and_transform function from the ‘c3s_eqc_automatic_quality_control’ package is employed to download daily data from the CMIP6 models, compute the energy-consumption-related indices for the selected temporal aggregation (“DJF” for HDD and “JJA” for CDD), calculate the mean and trend over the future period (2016-2099), interpolate to the regridding model’s grid (only where specified; otherwise the original model’s grid is maintained), and cache the result (to avoid redundant downloads and processing).

model='access_cm2'

model='awi_cm_1_1_mr'

model='cmcc_esm2'

model='cnrm_cm6_1_hr'

model='cnrm_esm2_1'

model='ec_earth3_cc'

model='gfdl_esm4'

model='inm_cm4_8'

model='inm_cm5_0'

model='kiost_esm'

model='mpi_esm1_2_lr'

model='miroc6'

model='miroc_es2l'

model='mri_esm2_0'

model='noresm2_mm'

model='nesm3'

2.3. Apply land-sea mask, change attributes and cut the region to show

This section performs the following tasks:

Cuts the region of interest.

Downloads the land-sea mask from ERA5.

Regrids the ERA5 land-sea mask to the model_regrid grid and applies it to the regridded data.

Regrids the ERA5 land-sea mask to each model’s original grid and applies it to the data on that grid.

Changes some variable attributes for plotting purposes.

Note: ds_interpolated contains data from the models regridded to the regridding model’s grid. model_datasets contain the same data but in the original grid of each model.

3. Plot and describe results

This section will display the following results:

Maps representing the spatial distribution of the future trends (2016-2099) of the indices ‘HDD15.5’ and ‘CDD22’ for each model individually, the ensemble median (understood as the median of the trend values of the chosen subset of models calculated for each grid cell), and the ensemble spread (derived as the standard deviation of the distribution of the chosen subset of models).

Boxplots which represent statistical distributions (PDF) built on the spatially-averaged future trend from each considered model.

3.1. Define plotting functions

The functions presented here are used to plot the trends calculated over the future period (2016-2099) for each of the indices (‘HDD15.5’ and ‘CDD22’).

For a selected index, two layout types will be displayed, depending on the chosen function:

Layout including the ensemble median and the ensemble spread for the trend: plot_ensemble() is used.

Layout including every model trend: plot_models() is employed.

trend==True displays trend values over the future period, while trend==False shows mean values. This notebook focuses on trends over the future period, so only trend values are shown and trend==True is used throughout. When the trend argument is set to True, regions where the trend is not statistically significant are hatched. For individual models, a grid point is considered to have a statistically significant trend when the p-value is lower than 0.05 (in such cases, no hatching is shown). For the ensemble median (understood as the median of the trend values of the chosen subset of models calculated for each grid cell), significance is instead determined from agreement categories, following the advanced approach proposed in AR6 IPCC on pages 1945-1950. The hatch_p_value_ensemble() function is used to distinguish, for each grid point, between three possible cases:

If more than 66% of the models are statistically significant (p-value < 0.05) and more than 80% of the models share the same sign, we consider the ensemble median trend to be statistically significant, and there is agreement on the sign. To represent this, no hatching is used.

If less than 66% of the models are statistically significant, regardless of agreement on the sign of the trend, hatching is applied (indicating that the ensemble median trend is not statistically significant).

If more than 66% of the models are statistically significant but less than 80% of the models share the same sign, we consider the ensemble median trend to be statistically significant, but there is no agreement on the sign of the trend. This is represented using crosses.
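The three cases above can be expressed as a small decision function. This is a hedged sketch and not the notebook's hatch_p_value_ensemble() function: the name `classify_ensemble_significance`, the returned labels and the sample values are hypothetical. The text does not say how a fraction exactly equal to 66% or 80% is treated, so the handling of those boundary values below is an assumption.

```python
def classify_ensemble_significance(p_values, trends, alpha=0.05,
                                   sig_fraction=0.66, sign_fraction=0.80):
    """Classify one grid point from per-model p-values and trend values.

    Returns "none" (significant, sign agreement), "hatching" (not
    significant) or "crosses" (significant, no sign agreement).
    """
    n_models = len(p_values)
    frac_significant = sum(p < alpha for p in p_values) / n_models
    n_positive = sum(t > 0 for t in trends)
    n_negative = sum(t < 0 for t in trends)
    frac_same_sign = max(n_positive, n_negative) / n_models

    # Assumption: a fraction exactly at a threshold does not exceed it.
    if frac_significant <= sig_fraction:
        return "hatching"
    if frac_same_sign > sign_fraction:
        return "none"
    return "crosses"


# Hypothetical values for one grid point and ten models.
significant_p = [0.01] * 8 + [0.20] * 2
case_agree = classify_ensemble_significance(
    significant_p, [-0.2] * 9 + [0.1])
case_not_significant = classify_ensemble_significance(
    [0.01] * 5 + [0.20] * 5, [-0.2] * 10)
case_no_sign_agreement = classify_ensemble_significance(
    significant_p, [-0.2] * 6 + [0.1] * 4)
print(case_agree, case_not_significant, case_no_sign_agreement)
```

Applying such a function at every grid point yields the map of unhatched, hatched and crossed regions drawn over the ensemble median.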

The colorbar chosen to represent trends for the HDD15.5 index spans from red to blue. In this color scheme, negative trend values (shown in red) indicate a decrease in Heating Degree Days over time. Conversely, blueish colors indicate an increase in Heating Degree Days over time. This selection is based on the rationale that more Heating Degree Days are associated with colder conditions, typically represented by blueish colors, while fewer Heating Degree Days indicate warmer conditions, depicted by reddish colors.

3.2. Plot ensemble maps

In this section, we invoke the plot_ensemble() function to visualise the trend calculated over the future period (2016-2099) for the model ensemble across Europe. Note that the model data used in this section has previously been interpolated to the “regridding model” grid ("gfdl_esm4" for this notebook).

Specifically, for each of the indices (‘HDD15.5’ and ‘CDD22’), this section presents a single layout including trend values of the future period (2016-2099) for: (a) the ensemble median (understood as the median of the trend values of the chosen subset of models calculated for each grid cell) and (b) the ensemble spread (derived as the standard deviation of the distribution of the chosen subset of models).
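A minimal sketch of how the two ensemble panels could be derived once all models share a common grid is given below. The function `ensemble_median_and_spread` and the trend values are hypothetical. The text specifies a standard deviation but not whether the population or sample form is used, so the population form is assumed here.

```python
import statistics


def ensemble_median_and_spread(model_trends):
    """Compute per-grid-cell median and standard deviation across models.

    model_trends is a list with one 2D grid (list of rows) per model,
    all interpolated to the same grid.
    """
    n_rows = len(model_trends[0])
    n_cols = len(model_trends[0][0])
    median = []
    spread = []
    for i in range(n_rows):
        median_row = []
        spread_row = []
        for j in range(n_cols):
            cell_values = [grid[i][j] for grid in model_trends]
            median_row.append(statistics.median(cell_values))
            # Assumption: population standard deviation (ddof = 0).
            spread_row.append(statistics.pstdev(cell_values))
        median.append(median_row)
        spread.append(spread_row)
    return median, spread


# Hypothetical trends (°C per decade) from three models on a 1x2 grid.
model_trends = [
    [[-0.2, 0.1]],
    [[-0.3, 0.1]],
    [[-0.1, 0.4]],
]
median, spread = ensemble_median_and_spread(model_trends)
print(median, spread)
```

Cells where the models disagree more, such as the second cell in this sample, show a larger spread even when the median is similar.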

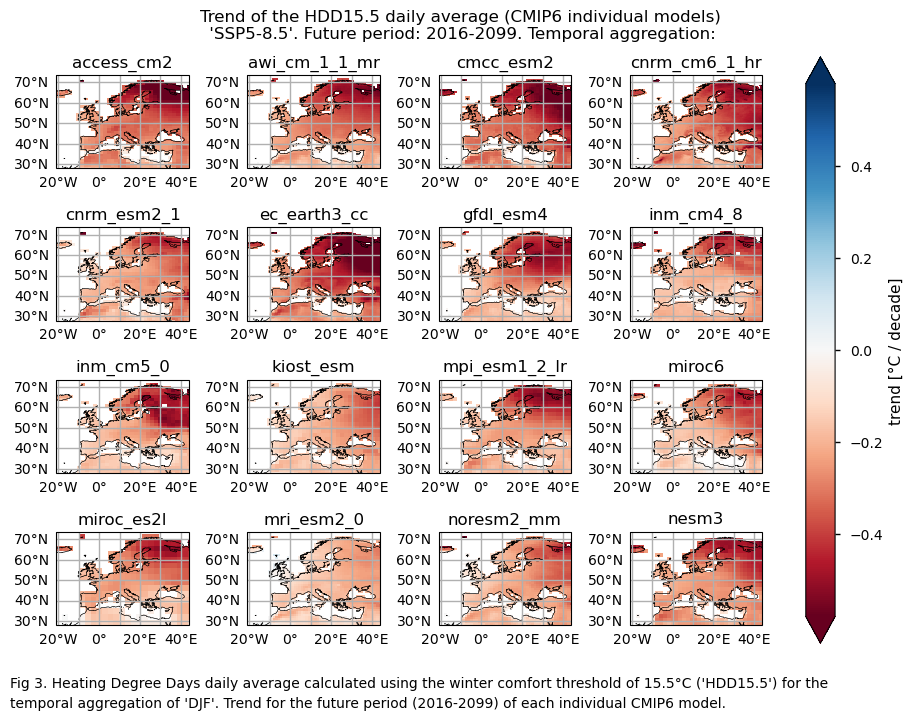

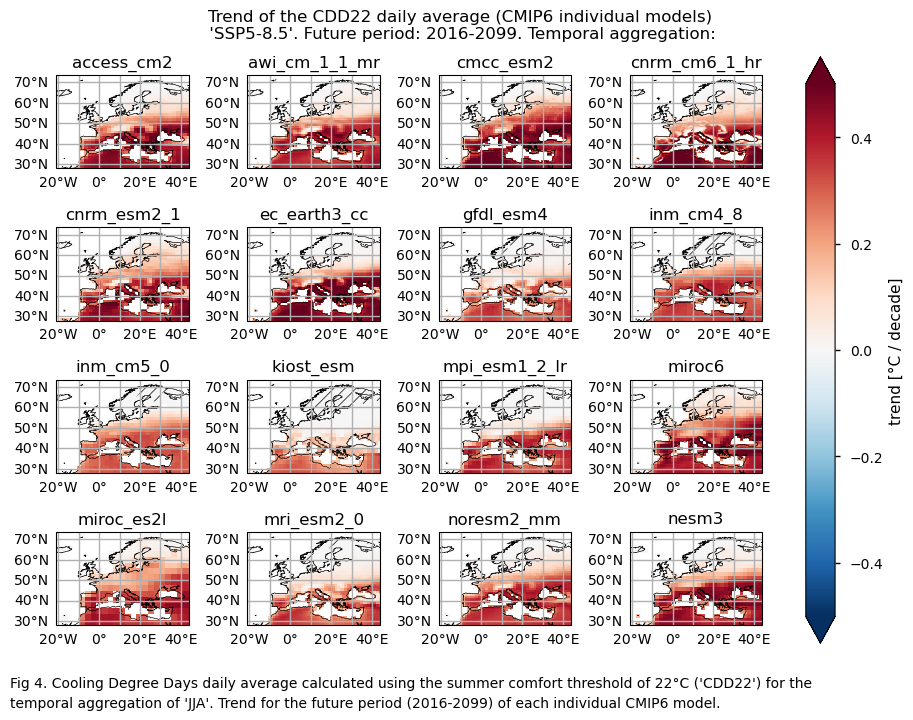

3.3. Plot model maps

In this section, we invoke the plot_models() function to visualise the trend calculated over the future period (2016-2099) for every model individually across Europe. Note that the model data used in this section maintains its original grid.

Specifically, for each of the indices (‘HDD15.5’ and ‘CDD22’), this section presents a single layout including the trend for the future period (2016-2099) of every model.

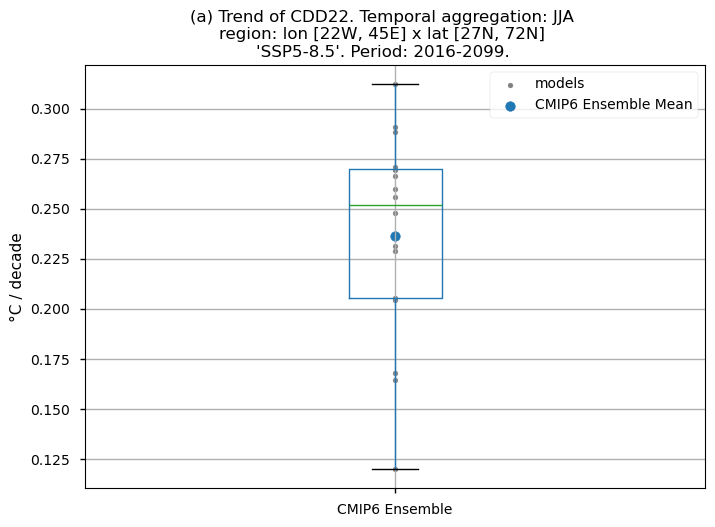

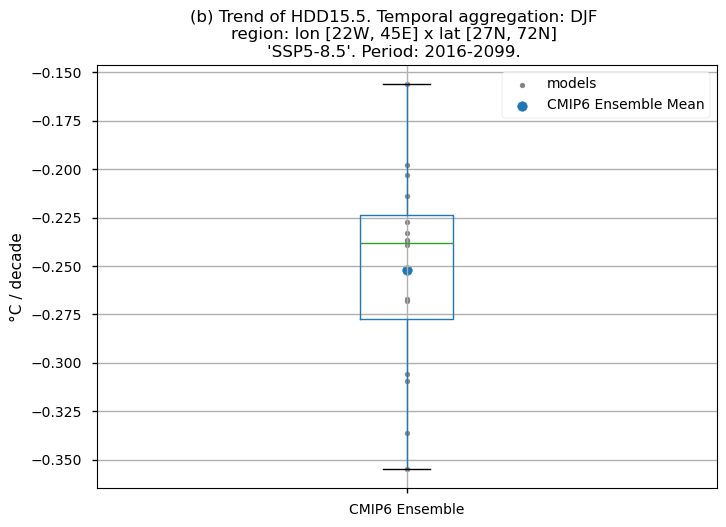

3.4. Boxplots of the future trend

Finally, we present boxplots representing the ensemble distribution of each climate model trend calculated over the future period (2016-2099) across Europe.

Dots represent the spatially-averaged future trend over the selected region (change of the number of days per decade) for each model (grey) and the ensemble mean (blue). The ensemble median is shown as a green line. Note that the spatially averaged values are calculated for each model from its original grid (i.e., no interpolated data has been used here).

The boxplot visually illustrates the distribution of trends among the climate models, with the box covering the first quartile (Q1 = 25th percentile) to the third quartile (Q3 = 75th percentile), and a green line indicating the ensemble median (Q2 = 50th percentile). Whiskers extend from the edges of the box to show the full data range.
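The quantities drawn in such a boxplot can be computed directly from the list of spatially-averaged trends. The sketch below uses hypothetical trend values and the "inclusive" quantile method from the Python standard library; plotting libraries may interpolate quartiles differently, so the method is an assumption.

```python
import statistics


def boxplot_summary(trends):
    """Return the statistics shown in the boxplot for a list of trends."""
    q1, q2, q3 = statistics.quantiles(trends, n=4, method="inclusive")
    return {
        "q1": q1,
        "median": q2,
        "q3": q3,
        "mean": statistics.fmean(trends),
        "min": min(trends),
        "max": max(trends),
    }


# Hypothetical spatially-averaged trends (°C per decade) for five models.
hdd_trends = [-0.30, -0.27, -0.25, -0.23, -0.20]
summary = boxplot_summary(hdd_trends)
print(summary)
```

The box spans q1 to q3, the line inside the box is the median, and the ensemble mean is plotted separately as a dot.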

Fig 5. Boxplots illustrating the future trends of the distribution of the chosen subset of models for the Energy Degree Days indices daily averaged: (a) 'CDD22', and (b) 'HDD15.5'. The distribution is created by considering spatially averaged trends across Europe. The ensemble mean and the ensemble median trends are both included. Outliers in the distribution are denoted by a grey circle with a black contour.

3.5. Results summary and discussion

Trends calculated for the future period (2016-2099) exhibit a general decrease in Heating Degree Days (HDD15.5) across Europe for DJF, especially in the northern and central-eastern regions where winter Heating Degree Days are typically higher. For Cooling Degree Days (CDD22) trends calculated for summer in Europe, an increase can be observed, particularly in the southern half and central regions. However, no trend is observed for some northern regions and certain mountain areas, possibly due to the threshold temperature of 22°C being too high to be reached across these regions.

The boxplots illustrate a decrease in Heating Degree Days (HDD15.5) across Europe for DJF during the future period from 2016 to 2099. The ensemble median trend reaches a daily average value near -0.23 °C per decade, which is a larger decrease than that captured by ERA5 for the historical period (around -0.15 °C per decade). The interquartile range of the ensemble spans from -0.275 to around -0.225 °C per decade.

The boxplot analysis reveals an increase in Cooling Degree Days (CDD22) across Europe for JJA, with an ensemble median trend value near 0.25 °C per decade and an interquartile range that spans approximately from 0.20 to 0.27 °C per decade. This increase is again larger than that reflected by the ERA5 calculations over the historical period (around 0.11 °C per decade).

What do the results mean for users? Are the biases relevant?

The projected decrease in Heating Degree Days during winter and the projected increase in Cooling Degree Days during summer provide valuable information for decisions sensitive to future energy demand. However, it is crucial to consider the biases identified during the historical period (1971-2000) in the assessment “CMIP6 Climate Projections: evaluating biases in energy-consumption-related indices in Europe”. Depending on the region, these biases can either enhance or diminish confidence in interpreting the assessment results. The inter-model spread, which is used to account for projected uncertainties, should also be taken into account, as these uncertainties can lead to misinterpretations regarding the magnitude of projected increases or decreases.

It is important to note that the results presented are specific to the 16 models chosen, and users should aim to assess as wide a range of models as possible before making a sub-selection.

ℹ️ If you want to know more

Key resources

Some key resources and further reading were linked throughout this assessment.

The CDS catalogue entries for the data used were:

CMIP6 climate projections (Daily - air temperature): https://cds.climate.copernicus.eu/datasets/projections-cmip6?tab=overview

Code libraries used:

C3S EQC custom functions, c3s_eqc_automatic_quality_control, prepared by B-Open

icclim Python package

References

[1] Bindi, M., Olesen, J.E. (2011). The responses of agriculture in Europe to climate change. Reg Environ Change 11 (Suppl 1), 151–158. https://doi.org/10.1007/s10113-010-0173-x

[2] Lindner, M., Maroschek, M., Netherer, S., Kremer, A., Barbati, A., Garcia-Gonzalo, J., Seidl, R., Delzon, S., Corona, P., Kolström, M., Lexer, M.J., Marchetti, M. (2010). Climate change impacts, adaptive capacity, and vulnerability of European forest ecosystems. For. Ecol. Manage. 259(4): 698–709. https://doi.org/10.1016/j.foreco.2009.09.023

[3] Santamouris, M., Cartalis, C., Synnefa, A., Kolokotsa, D. (2015). On the impact of urban heat island and global warming on the power demand and electricity consumption of buildings – a review. Energy Build. 98: 119–124. https://doi.org/10.1016/j.enbuild.2014.09.052

[4] Jacob, D., Petersen, J., Eggert, B. et al. (2014). EURO-CORDEX: new high-resolution climate change projections for European impact research. Reg Environ Change 14, 563–578. https://doi.org/10.1007/s10113-013-0499-2

[5] IPCC. 2014. In Climate Change 2014: Synthesis Report. Contribution of Working Groups I, II and III to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change, Core Writing Team, RK Pachauri, LA Meyer (eds). IPCC: Geneva, Switzerland 151 pp.

[6] Spinoni, J., Vogt, J.V., Barbosa, P., Dosio, A., McCormick, N., Bigano, A. and Füssel, H.-M. (2018). Changes of heating and cooling degree-days in Europe from 1981 to 2100. Int. J. Climatol, 38: e191-e208. https://doi.org/10.1002/joc.5362

[7] Scoccimarro, E., Cattaneo, O., Gualdi, S. et al. (2023). Country-level energy demand for cooling has increased over the past two decades. Commun Earth Environ 4, 208. https://doi.org/10.1038/s43247-023-00878-3